Introduction

Large language models, or LLMs, are a type of AI that can mimic human intelligence. They use statistical models to analyze vast amounts of data, learning the patterns and connections between words and phrases.

AllegroGraph supports integration with Large Language Models.

A vector database organizes data as high-dimensional vectors. It is created by taking fragments of text, sending them to a Large Language Model (such as OpenAI's GPT), and receiving back embedding vectors suitable for storing in AllegroGraph.
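The flow just described (split text into fragments, embed each fragment, store the result) can be sketched in a few lines of Python. This is a toy illustration only: `toy_embed` is a hypothetical stand-in for the call to an embedding model, and the in-memory list stands in for the vector store. A real embedder returns vectors with far more dimensions that capture meaning rather than hashed word counts.

```python
import hashlib
import math
from typing import Dict, List

DIMENSIONS = 8  # real embedding models use hundreds or thousands of dimensions


def split_into_fragments(text: str) -> List[str]:
    """Split text into sentence-like fragments on periods."""
    return [part.strip() for part in text.split(".") if part.strip()]


def toy_embed(fragment: str) -> List[float]:
    """Hypothetical stand-in for an LLM embedding call.

    Hashes each word into one of DIMENSIONS buckets and normalizes the
    counts to unit length. Deterministic, but carries no real semantics.
    """
    vector = [0.0] * DIMENSIONS
    for word in fragment.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        vector[digest[0] % DIMENSIONS] += 1.0
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else vector


def build_vector_store(text: str) -> List[Dict]:
    """Pair every fragment with its embedding vector."""
    return [
        {"text": fragment, "embedding": toy_embed(fragment)}
        for fragment in split_into_fragments(text)
    ]


store = build_vector_store("Texas is a US state. Paris is the capital of France.")
for record in store:
    print(record["text"], len(record["embedding"]))
```

Each record keeps the original fragment next to its vector, so a later vector match can return readable text.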

Four aspects of LLM

The supported vendors and models: see Supported models below.

Keyword syntax: using Keyword Syntax for Magic Predicates allows simplified and more readable calls to the supported predicates.

The selector argument (when the predicate uses a vector database) reduces what is searched; see the Using the ?selector and ?useClustering arguments with LLM Magic Predicates document.

Clustering speeds up vector matching (again, when the predicate uses a vector database); see the Using the ?selector and ?useClustering arguments with LLM Magic Predicates document.

Supported vendors and models

Currently the supported LLM vendors are OpenAI GPT (the default option for chat and embedder models) and Ollama.

OpenAI

OpenAI is a vendor described at OpenAI GPT. OpenAI requires an API key.

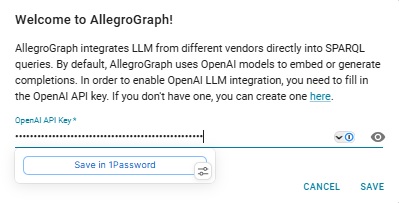

Starting in release 8.3.1, admin users (see the Managing Users document) can specify an OpenAI key in AG WebView by navigating to the webview/welcome/ page (that is [server:port]/webview/welcome/). Doing so will cause this dialog to be displayed:

Admin users can then fill in the OpenAI key (already done in the illustration) and click Save. The key will be used whenever it is required.

(Note that the webview/welcome page was introduced in version 8.3.1. In version 8.3.0, OpenAI keys were associated with individual vector repos and with individual SPARQL queries (with a PREFIX) rather than being set once, globally. There are many places in the documentation where you are told how to specify the OpenAI key, and those instructions can be ignored if an admin user has specified it on the webview/welcome page. They all still work, however, and if an admin user has not set the key globally as shown here, all users must specify the key when required.)

The WebView tool supports an LLM Playground where you can try out LLM queries which require the OpenAI API key. That tool makes it very easy to experiment with such LLM queries.

OpenAI has various models, including

- gpt-3.5-turbo

- gpt-4

- gpt-4o

- gpt-4-turbo

The default model for the llm:response, llm:askMyDocuments, and llm:askForTable magic properties (see Use of Magic predicates and functions) is gpt-4. You can select a different OpenAI model, for example gpt-3.5-turbo, by placing this form into the AllegroGraph initfile:

(setf gpt::*openai-default-ask-chat-model* "gpt-3.5-turbo")

Ollama

Ollama does not require a key but does require an entry in the AllegroGraph configuration file, as described in the Server Configuration and Control document. See the Ollama document for more information.

SERP

We also use SERP API, which is a service that returns Google and other search results through an API. It is used in conjunction with LLM APIs to validate LLM results (i.e. to detect hallucinations). Separate keys are required for each.

You may need an API key to use any of the LLM-associated magic predicates

Ollama does not require a key but OpenAI and SERP do.

The keys shown below are not valid OpenAI API or serpAPI keys. They are used to illustrate where your valid key will go.

To get an OpenAI API key go to the OpenAI website and sign up. To get a serpAPI key, go to https://serpapi.com/.

There are various ways to configure your API key, as a query option prefix or in the AllegroGraph configuration.

The actual openaiApiKey starts with sk- followed by a lot of numbers and letters. The serpApiKey is just a lot of numbers and letters. In the query option prefixes just below, the keys are preceded with franz: and the whole thing is wrapped in angle brackets. Similarly for the query options just below that.

As a query option prefix, write (the keys are not valid, replace with your valid key(s)):

PREFIX franzOption_openaiApiKey: <franz:sk-U01ABc2defGHIJKlmnOpQ3RstvVWxyZABcD4eFG5jiJKlmno>

PREFIX franzOption_serpApiKey: <franz:11111111111120b7c4d3d04a9837e3362edb2733318effec25c447445dfbf594>

Syntax for the config file, which causes the option to be applied to all queries (see the Server Configuration and Control document for information on the config file):

QueryOption openaiApiKey=<franz:sk-U01ABc2defGHIJKlmnOpQ3RstvVWxyZABcD4eFG5jiJKlmno>

QueryOption serpApiKey=<franz:11111111111120b7c4d3d04a9837e3362edb2733318effec25c447445dfbf594>

The first option (openaiApiKey) is applied to each query in the LLM Playground, but the second (serpApiKey) is not.

You can also put the values in the data/settings/default-query-options file:

(("franzOption_openaiApiKey" "<franz:sk-U01ABc2defGHIJKlmnOpQ3RstvVWxyZABcD4eFG5jiJKlmno>"))

(("franzOption_serpApiKey" "<franz:11111111111120b7c4d3d04a9837e3362edb2733318effec25c447445dfbf594>"))

Remember your key is valuable (its use may cost money and others with the key can use it at your cost) so protect it.
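One way to keep keys out of saved query text is to read them from the environment and prepend the query option prefixes just before a query is sent. The sketch below assumes that approach. The environment variable names `OPENAI_API_KEY` and `SERPAPI_KEY` and the helper function names are hypothetical; only the `franzOption_openaiApiKey` and `franzOption_serpApiKey` prefix forms come from the text above.

```python
import os
from typing import List, Mapping, Optional

# Maps a query option name (from the text above) to the environment
# variable assumed to hold its key. The variable names are hypothetical.
OPTION_ENV_VARS = {
    "openaiApiKey": "OPENAI_API_KEY",
    "serpApiKey": "SERPAPI_KEY",
}


def key_prefix_lines(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return one PREFIX line per key found in the environment."""
    env = os.environ if env is None else env
    lines = []
    for option, variable in OPTION_ENV_VARS.items():
        key = env.get(variable)
        if key:
            # The key is preceded by franz: and wrapped in angle brackets.
            lines.append(f"PREFIX franzOption_{option}: <franz:{key}>")
    return lines


def with_key_prefixes(query: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Prepend the key prefixes to a SPARQL query string."""
    return "\n".join(key_prefix_lines(env) + [query])


# Placeholder value, not a valid key.
demo_env = {"OPENAI_API_KEY": "sk-NOT-A-REAL-KEY"}
print(with_key_prefixes("SELECT * { ?s ?p ?o } LIMIT 1", demo_env))
```

Passing an explicit mapping, as in the demo, keeps the helper easy to try without touching real environment variables; calling it with no second argument reads `os.environ` instead.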

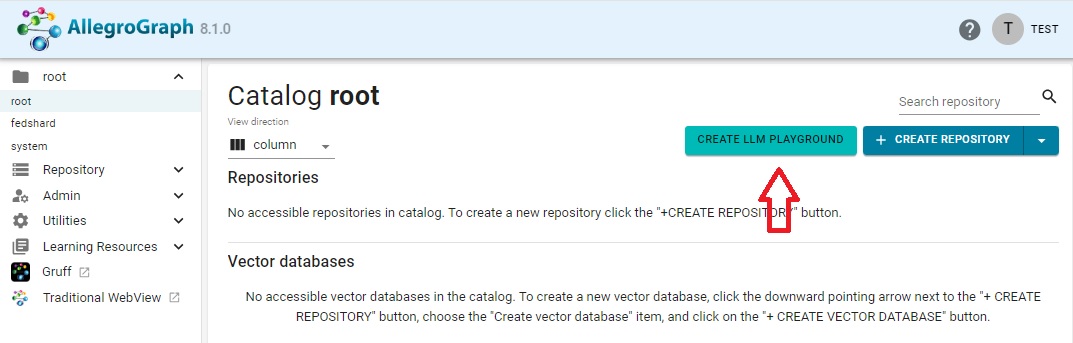

The WebView LLM Playground prompts you for the OpenAI API key at the start of a session. First click on the Create LLM Playground button:

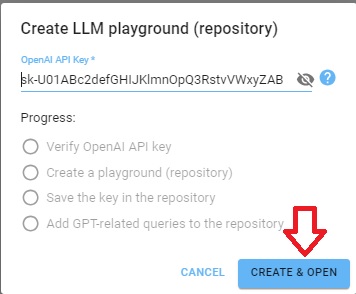

The following dialog comes up. Enter your OpenAI API key as shown (again that key is not actually valid):

Click Create & Open and a repo which automatically adds a PREFIX with the OpenAI API key to every query. (It does not add a PREFIX with the SERPApi API key. You must supply that yourself if you use the askSerp magic predicate. See the serpAPI example.

SPARQL LLM magic predicates (LLMagic)

AllegroGraph LLMagic supports LLM magic predicates (internal functions which act like SPARQL operators). These are listed in the SPARQL Magic Property document under the heading Large Language Models. (Click on a listed magic property to see additional documentation.) See also LLMagic functions listed here.

For example, the http://franz.com/ns/allegrograph/8.0.0/llm/response magic property prompts GPT for a response. Here we ask for a list of 5 US states:

PREFIX llm: <http://franz.com/ns/allegrograph/8.0.0/llm/>

SELECT * { ?response llm:response "List 5 US States.". }

which produces the response:

response

"California"

"Texas"

"Florida"

"New York"

"Pennsylvania"

Vector databases

A vector database is a database that can store vectors (fixed-length lists of numbers) along with other data items. Vector databases typically implement one or more Approximate Nearest Neighbor (ANN) algorithms, so that one can search the database with a query vector to retrieve the closest matching database records. (Wikipedia)
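To make the idea of searching with a query vector concrete, here is a minimal sketch of the matching step. It uses exact brute-force search with cosine similarity, not an Approximate Nearest Neighbor algorithm, and it does not reflect how AllegroGraph implements matching. The records and their three-dimensional vectors are invented for illustration.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest(
    records: List[Tuple[str, List[float]]],
    query: Sequence[float],
    k: int = 2,
) -> List[Tuple[float, str]]:
    """Return the k records most similar to the query, best match first."""
    scored = [(cosine_similarity(vector, query), text) for text, vector in records]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Made-up records: (stored text, embedding vector).
records = [
    ("cats", [0.9, 0.1, 0.0]),
    ("dogs", [0.8, 0.2, 0.1]),
    ("stock markets", [0.0, 0.1, 0.9]),
]

for score, text in nearest(records, [1.0, 0.0, 0.0], k=2):
    print(f"{text}: {score:.3f}")
```

Brute-force search compares the query against every stored vector, which is why larger collections rely on ANN algorithms and techniques such as clustering to reduce the number of comparisons.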

See the document Vector Databases and Storage for further information and examples using vector databases.

LLM Examples

See LLM Examples. The LLM Split Specification document also contains some examples. The LLM Embed Specification has some vector database examples. The Natural Language Queries/Q&A system/ChatBot document shows a natural language interface for querying LLM databases. Building a Knowledge Graph via an LLM shows building a Chatbot on the AllegroGraph.cloud server. The example can also be run in a local AllegroGraph.