Introduction: About Ollama

Ollama is a platform designed for deploying, managing, and interacting with AI language models locally. It focuses on making it easy for users to run large language models (LLMs) on their own hardware, without needing to rely on cloud services or external servers. The platform's primary advantage is allowing users to operate AI models while maintaining control over their data and reducing latency associated with cloud-based processing.

If you use Ollama, you must provide information about your Ollama setup and set LLM query options in the agraph.cfg file. See the Server Configuration and Control document for more information.

Not all models are supported: a model must support function calling to be used. The models llama3.1, mistral, and qwen2 provide such support, and other models will also work if they support function calling. If you have questions about other models, please contact support@franz.com.
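To make "function calling" concrete, here is a minimal sketch of what a tool-enabled request to Ollama's chat endpoint (`/api/chat`) looks like and how tool calls can be read from the reply. It is an illustration only, not how AllegroGraph itself checks a model: the `get_weather` tool and both helper names are hypothetical, and the payload is built without being sent.

```python
def make_tool_chat_payload(model, question):
    """Build a request body for Ollama's /api/chat that offers one tool."""
    # Hypothetical tool, described with a JSON-schema parameter block.
    get_weather = {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "tools": [get_weather],
        "stream": False,  # ask for a single JSON reply
    }


def requested_tool_calls(chat_response):
    """Return (name, arguments) pairs for each tool the model asked to call."""
    calls = chat_response.get("message", {}).get("tool_calls") or []
    return [(c["function"]["name"], c["function"]["arguments"]) for c in calls]
```

A model that supports function calling can answer such a request with a `tool_calls` entry in its message; a model without that support is expected to reject the request or never return one.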

A quick example

Suppose you have an Ollama server running on machine1 that serves a trained Llama 3.1 model. You can then query that server entirely on machine1 (which need not even be connected to the internet) with a query like this:

    PREFIX franzOption_llmVendor: <franz:ollama>
    PREFIX franzOption_llmScheme: <franz:http>
    PREFIX franzOption_llmHost: <franz:127.0.0.1>
    PREFIX franzOption_llmPort: <franz:11434>
    PREFIX franzOption_llmChatModel: <franz:llama3.1>
    PREFIX llm: <http://franz.com/ns/allegrograph/8.0.0/llm/>

    SELECT * { ?response llm:response "List ten classic H.P.Lovecraft books". }

The Llama 3.1 server has been trained and will provide a response based on that training. No further internet access is required.
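When the host, port, or model changes between environments, the query text above can be assembled from plain settings. The following is a minimal sketch: `build_llm_query` is a hypothetical helper, not part of AllegroGraph, and it only produces the query string using the prefix names shown in the example. Submitting the query to a repository is left to whichever client you already use.

```python
LLM_NAMESPACE = "http://franz.com/ns/allegrograph/8.0.0/llm/"


def build_llm_query(prompt, host="127.0.0.1", port=11434,
                    model="llama3.1", scheme="http"):
    """Return SPARQL text that sends `prompt` to an Ollama server."""
    options = {
        "llmVendor": "ollama",
        "llmScheme": scheme,
        "llmHost": host,
        "llmPort": str(port),
        "llmChatModel": model,
    }
    # Each query option is written as a PREFIX whose IRI carries the value.
    lines = [f"PREFIX franzOption_{name}: <franz:{value}>"
             for name, value in options.items()]
    lines.append(f"PREFIX llm: <{LLM_NAMESPACE}>")
    # Escape backslashes, double quotes, and newlines so the prompt
    # remains a valid SPARQL string literal.
    escaped = (prompt.replace("\\", "\\\\")
                     .replace('"', '\\"')
                     .replace("\n", "\\n"))
    lines.append(f'SELECT * {{ ?response llm:response "{escaped}" . }}')
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_llm_query("List ten classic H.P.Lovecraft books"))
```

Keeping the options in one dictionary makes it easy to point the same query at a different Ollama host or model without editing the query text by hand.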

Note the specified query options. Here are links to their documentation:

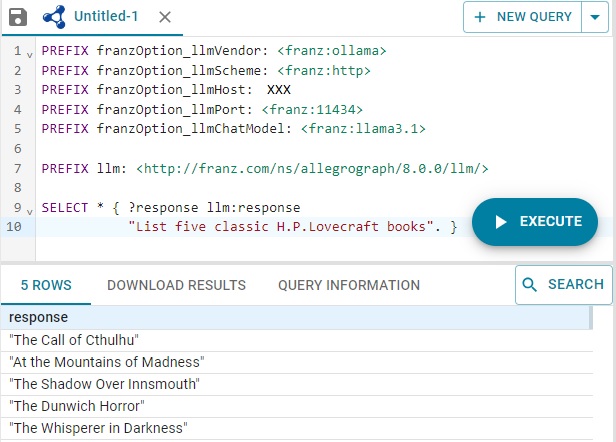

A Simple Example

Here we pose a fairly simple question to an Ollama host (location X'ed out):

Key Features of Ollama

Local Model Execution

Ollama allows users to run advanced LLMs, such as Llama 3.1 and Mistral, on their local machines. This can be beneficial for privacy, data security, and control over the environment.

Privacy Focus

Since the models are executed locally, no data needs to be sent to the cloud. This can be particularly advantageous for businesses or individuals concerned about the privacy of their interactions or sensitive data.

Pre-Trained Models

The platform supports running pre-trained AI models, meaning users can use these models out of the box for various tasks, such as text generation, summarization, or translation.

Ease of Use

Ollama is designed to be user-friendly, providing simple interfaces for interacting with the models without needing advanced technical knowledge.

Developer Integration

Developers can integrate Ollama with their own applications, making it easier to add language model capabilities without relying on external API calls.
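As an illustration of such an integration, the sketch below calls a local Ollama server through its REST endpoint `/api/generate` using only the Python standard library. The default address `http://127.0.0.1:11434` and the model name `llama3.1` are assumptions taken from the example earlier in this document, and the helper names are hypothetical.

```python
import json
import urllib.request


def make_generate_request(prompt, model="llama3.1",
                          base_url="http://127.0.0.1:11434"):
    """Build (but do not send) a POST request for Ollama's /api/generate."""
    body = json.dumps({
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one JSON object instead of a stream
    }).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, **kwargs):
    """Send the request to the local server and return the generated text."""
    request = make_generate_request(prompt, **kwargs)
    with urllib.request.urlopen(request) as resp:
        return json.loads(resp.read())["response"]
```

With a server running and the model pulled, `generate("Why is the sky blue?")` would return the model's answer as a string. Separating request construction from sending keeps the first function usable in tests without a live server.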

Data Privacy and Control

Since everything runs locally, Ollama can be particularly useful in industries where sensitive data handling and compliance are essential.

Offline Access

The platform allows users to use AI models without needing constant internet access, making it practical in environments where connectivity is limited.