This is the reference guide for AllegroGraph 8.4.1. See the server installation and Lisp Quick Start for an overview. An introduction to AllegroGraph covering all of its many features at a high-level is in the AllegroGraph Introduction.

Symbols and packages

Allegro CL has case-sensitive executables (such as mlisp) and case-insensitive executables (such as alisp). The AllegroGraph fasl files will only work in the case-sensitive mode.

Unless otherwise noted, symbols defined in this document are exported from the db.agraph package (nicknamed triple-store). The db.agraph.user package (nicknamed triple-store-user) is intended to be a convenient package in which to write simple AllegroGraph code. The db.agraph.user package uses the following packages:

- db.agraph

- sparql

- prolog

- excl

- common-lisp

When an application creates its own packages, it is recommended that they use the same packages as db.agraph.user (unless there is some specific name conflict to be avoided). Such application packages could thus be defined with a defpackage form like:

(defpackage :my-agraph-package (:use :db.agraph :sparql :prolog :cl :excl)

[other options]) or these forms could be evaluated after the package is created:

(use-package :db.agraph (find-package :my-agraph-package))

(use-package :sparql (find-package :my-agraph-package))

(use-package :prolog (find-package :my-agraph-package))

(use-package :cl (find-package :my-agraph-package))

(use-package :excl(find-package :my-agraph-package)) Again, if one or more of the packages used by db.agraph.user should be excluded because of actual or potential name conflicts, they should not be used and symbols in them will need to be package qualified in application code. All this is analogous to the common-lisp and common-lisp-user packages. Symbols in this document are not, in general, package-qualified.

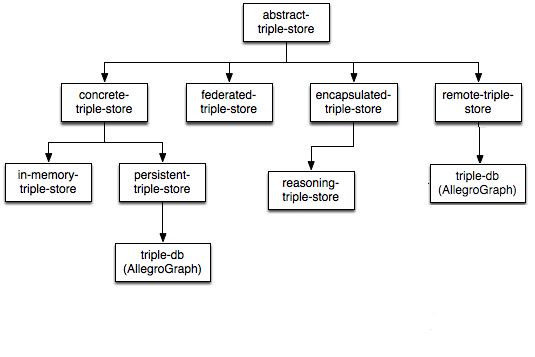

Triple Store Operations

These functions are used to create, delete, and examine triple-stores. For convenience, many operations act by default on the current triple-store which is kept in a special variable named *db*. The with-triple-store macro makes it easy to call other code with a particular store marked current. 1

Operations on a triple-store take place within a transaction. A transaction is started when a triple store is created or opened. A transaction is finished and a new transaction started with commit-triple-store or rollback-triple-store.

The default triple-store instance. API functions that take a db keyword argument will use this value by default.

Note that the *db* value should not be shared among multiple processes because add, rollback and commit events from independent processes will be executed on the same connection with unexpected results. Since *db* is the default value for many functions that have a :db keyword, this problem can easily arise in a multi-process application. To avoid it, each process should make its own connection to the database by using open-triple-store. As of v6.4.0, *db* will be bound to nil in each new thread to help avoid accidental sharing.

The default server port to use when connecting to an AllegroGraph server via HTTP. Functions create-triple-store and open-triple-store use this if no explicit port number is supplied.

The default value is: 10035

For the server-side configuration, see the Port directive in Server Configuration.

List the catalogs on the local AllegroGraph server running on port.

This function is deprecated, please refer to catalog-names instead.

Returns a list of strings, naming catalogs, and containing the value nil if there is a root catalog.

You can use the triple-store-class argument to specify the kind of store the named catalogs should contain. Like open-triple-store and create-triple-store, catalog-names takes additional keyword arguments that vary depending on this class name. For example, querying catalogs containing remote-triple-stores may require specifying a username and password. See open-triple-store or create-triple-store for additional details.

Returns a list of strings, naming triple-stores in a given catalog.

As in catalog-names, you can use the triple-store-class argument to specify the kind of store that should be listed. Argument include-shards-p can be used to include the local shards of the distributed triple stores in the result. Like open-triple-store and create-triple-store, triple-store-names takes additional keyword arguments that vary depending on this class name. For example, querying catalogs containing remote-triple-stores may require specifying a username and password. See open-triple-store or create-triple-store for additional details.

Return a list of property lists each of which describes a catalog on the server.

server- Specifies a server spec or URL of the AG server on which to operate. "127.1:10035" is used ifnilis passed for this argument.:repo-count- if true, return number of repositories for each catalog.

Return a list of property lists each of which describes a repository in the specified catalog.

server- Specifies the server spec or URL of the AG server on which to operate. "127.1:10035" is used ifnilis passed for this argument:catalog- catalog name. The default is the root catalog.:include-shards-p- if true then return the names of the shards of a distributed repo. The names of fedshard shards are always returned.:allp- if true, then all catalogs are considered and the :catalog argument ignored.:check-distributed-p- if true (default), only existing distributed stores will be returned. If false then all defined distributed store names are returned, even those store that haven't been created.:check-fedshard-p- if true (default), only existing fedshard stores will be returned. If false then all defined fedshard store names are returned, even those of store that haven't been created.

Close the triple-store db. Returns nil.

:db- the triple-store to close (defaults to *db*).:if-closed- controls the behavior when thedbprovided is closed, or (without:dbargument) when *db* isnil: Ifif-closedis:errorthen an error will be signaled in this situation. If it is:ignore(the default) thenclose-triple-storewill return without signaling an error.If

:commitis true, and the store is open, then commit-triple-store will be called before the store is closed. This defaults tonil.If

:ensure-not-lingeringis true, then ensure-not-lingering will be called after the store is closed.

If the triple store being closed is the same as *db*, then the new value of *db* depends on its type:

- in case of an encapsulated stream (like a reasoning store),

*db*is afterwards set to the inner store (in a sense unwrapping the encapsulation); - otherwise

*db*is set tonil.

Request the server to immediately close down the resources related to the given repository as soon as there are no more active connections to it. This function returns nothing.

Normally after the last connection to a repository is closed, the server keeps resources (like processes and memory structures) active for the duration specified by InstanceTimeout in Server Configuration. This speeds up a subsequent open-triple-store call if it happens before the timeout.

Call this function to indicate you don't expect the repository to be opened again immediately, or if you wish to save on resources generally.

name- the name of the triple-store:triple-store-class- symbol that indicates the kind of store thatnameis

Like open-triple-store and create-triple-store this function takes additional keyword arguments that vary depending on triple-store-class. For example for a remote-triple-store supplying username and password might be relevant.

See also the :ensure-not-lingering keyword argument to close-triple-store.

Applies outstanding changes to the repository. This ends the current transaction and begins a new one.

The durability, distributed-transaction-timeout, transaction-latency-count, and transaction-latency-timeout arguments relate to multi-master replication. See the Instance Settings section of the Multi-master Replication document for more information on those arguments.

The phase and xid arguments relate to the Two-phase Commit facility.

:db- Specifies the triple store to operate on. Defaults to *db*.:durability- Ifdurabilityis specified, it must be a positive integer, or:min,:max, or:quorum.:distributed-transaction-timeout- Ifdistributed-transaction-timeoutis specified, it must be a non-negative integer.:transaction-latency-count- Iftransaction-latency-countis specified, it must be a non-negative integer.:transaction-latency-timeout- Iftransaction-latency-timeoutis specified, it must be a non-negative integer.:phase- Ifphaseis specified, it must be:prepareor:commit. This is used for two phase commit (2PC) support.:xid- Ifxidis specified, it must be an XA Xid in string format.xidis required whenphaseis specified. Suitable strings are generated by the two-phase commit transaction coordinator.

Copy triples from source to target-db.

copy-triples adds each triple in source to the target-db and ensures that any strings in these triples are also interned in the target-db.

source can be a triple-store, a cursor that yields triples, a list or array of triples, or a single triple. If the source-db can be determined from source (e.g., if source is a triple-store or cursor), then source-db is ignored. Otherwise, the triples are assumed to exist in source-db, which defaults to *db*.

target-db should be an open and writable triple-store.

Create a new repository (also called a triple store). designator can be a string naming a store or a ground store repo spec. The following characters cannot be used in a repository name:

\ / ~ : Space $ { } ( ) < > * + [ ] | create-triple-store takes numerous keyword arguments (and more via &allow-other-keys). The allowed arguments vary depending on the class of triple-store being created. For example, a remote-triple-store requires :server, :port, and :user and :password (unless anonymous access is allowed) whereas an instance of triple-db does not. :user, (See Lisp Quick Start for an example of creating a remote triple store and other examples creating and opening triple stores.)

The possible arguments include (but are not limited to (note some described arguments do not appear in the argument list but are allowed because additional keyword arguments are allowed):

:if-exists- controls what to do if the triple-store already exists. The default value,:supersede, will cause create-triple-store to attempt to delete the old triple store and create a new one. Value:errorcauses create-triple-store to signal an error. If the triple-store is opened by another connection, then:supersedewill fail because the store cannot be deleted.:catalog- the catalog to use in the server's configuration file. If left unspecified, the root catalog will be used. Users may not create repositories in thesystemcatalog.:server- the server for a remote-triple-store. The default is "127.0.0.1" (which is the local machine).:port- the port to use to access the AllegroGraph server. The default is the value of default-ag-http-port:user- the username to use when accessing the server (anonymous access, if supported, does not require a user value):password- the password to use when accessing the server (anonymous access, if supported, does not require a password):scheme- the protocol to use to access the server. May be:httpor:https. The default is:http.:triple-store-class- the kind of triple-store to create. If left unspecified and a repo spec is being used the triple-store class will be automatically selected otherwise triple-db will be used.:expected-size- an integer specifying the expected number of triples in the store. A value outside the range 100,000,000 to 1,000,000,000 will be brought into that range. This overrides the ExpectedStoreSize parameter in agraph.cfg:string-table-size- an integer specifying the number of strings that are expected to be in the string table. A value outside the range of 1024*1024 to 512*1024*1024 will be brought into that range. This overides the value of the StringTableSize parameter in agraph.cfg.:params- If supplied, the value must be an alist of catalog configuration directives and values (both represented as strings). The catalog directives are documented in the Catalog directives section of the Server Configuration and Control document. Any catalog directive can be specified. The catalog defaults will be used for directives that are not specified by this argument. For example, suppose you want the new repository to have a string table size of 128 MB and uselzostring compression (rather than the default, which is no compression), then you would specify the following value(create-triple-store ... :params '(("StringTableSize" . "128m") ("StringTableCompression" . "lzo"))):with-indices- a list of index flavors that this store will use. If left unspecified, the store will use the standard indices:(:gposi :gspoi :ospgi :posgi :psogi :spogi :i).:https-verification- the value should be a list of keywords and value pairs (see below);:proxy,:proxy-host, ... - HTTP proxy specification, only applicable iftriple-store-classisremote-triple-store(see below).

This argument will not work when creating a repository using the remote triple store interface. It only works for repositories created with a direct lisp interface.

The possible keys for :https-verification options are:

:methodallows control over the SSL protocol handshake process; can be::sslv23(the default),:sslv2,:sslv3,:sslv3+,:tlsv1;:certificatenames a file which contains one or more PEM-encoded certificates;:keyshould be a string or pathname naming a file containing the private RSA key corresponding the the public key in the certificate. The file must be in PEM format.:certificate-passwordif specified, should be a string.:verify:nilmeans that no automatic verification will occur,:optionalmeans that the server's certificate (if supplied) will be automatically verified during SSL handshake,:requiredmeans that the server's certificate will be automatically verified during SSL handshake.:maxdepthmust be an integer (which defaults to 10) which indicates the maximum allowable depth of the certificate verification chain;:ca-filespecifies the name of a file containing a series of trusted PEM-encoded Intermediate CA or Root CA certificates that will be used during peer certificate verification;:ca-directoryspecifies the name of a directory containing a series of trusted Intermediate CA or Root CA certificate files that will be used during peer certificate verification;:ciphersshould be a string which specifies an OpenSSL cipher list. These values are passed as arguments to the functionsocket:make-ssl-client-stream.:load-initfile- if true, and if a session on the server is created to open the repository, then re-load the initfile in the session process. This has no effect for a repo opened with the direct lisp interface.:scripts- a list of script file names to load when a session on the server is created to open the repository. This has no effect for a repo opened with the direct lisp interface.

Here is an example:

(create-triple-store "clienttest"

:triple-store-class 'remote-triple-store

:port 10398 :server "localhost" :scheme :https

:https-verification '(;; Server must identify to the client and

;; must pass certificate verification.

;; Other sensible option is :optional; :nil

;; suppresses verification altogether and

;; should never be used.

:verify :required

;; The CA certificate used to sign the server's

;; certificate.

:ca-file "/path/to/ca.cert"

;; The certificate and key file to authenticate

;; this client with.

:certificate "/path/to/test.cert")) The :proxy value must be a string of the form:

[<user>[:<password>]@]<host>[:<port>] that instructs HTTP client to send all requests through the HTTP proxy server at *

All components of the spec can be provided explicitly via :proxy-host, :proxy-port, :proxy-user and :proxy-password parameters, in which case they will override the components extracted from the :proxy string.

Additionally :proxy-bypass-list can provide a list of domain name suffix strings for which to bypass the proxy. It defaults to '("127.0.0.1" "::1" "localhost"). Note that a suffix like "localhost" will also match hosts "xyzlocalhost" and "xyz.localhost").

Examples of proxy settings:

(create-triple-store "clienttest"

:triple-store-class 'remote-triple-store

:proxy "localhost:12345"

:proxy-user "donny"

:proxy-password "iknowuknow")

(create-triple-store

"clienttest"

:triple-store-class 'remote-triple-store

:proxy "donny:iknowuknow@localhost:12345"

:proxy-bypass-list '("internal.example.org")) create-triple-store returns a triple-store object, and sets the value of the variable *db* to that object.

Ensure that the given triple store that is the value of db (default *db*) contains a vector store. If db is already a vector triple store then it will not be modified if supersede is nil. If supersede is true then the existing vector store in db will be completely removed before creating a new vector store in db. Only vector store triples will be deleted, the other triples in the store will remain. The embedder, model and api-key arguments are the same as for create-triple-store.

:db- Specifies the triple store to operate on. Defaults to *db*.embedder- name of the embedding service:model- model to be used in the embedding service:dimensions- length of embedding vector:embedding-api-key- api key, if any, need by the embedding service:supersede- Replace existing vector store (vectors and metadata only) if it exists

The name of the triple-store db (read-only).

This is set when the triple-store is created or opened.

db. This is the graph that will be assigned to any triples added to db unless a different graph is specified.

Delete an existing triple store. Returns t if the deletion was successful and nil if it was not.

The db-or-name argument can be either a triple store instance or the name of a triple store. If it is an instance, then the triple store associated with the instance will be closed if it is open and then it will be deleted (assuming that it is not open by any other processes). If db-or-name is eq to *db*, then *db* will be set to nil once the triple-store is deleted.

The :if-does-not-exist keyword argument specifies what to do if the triple-store does not exist. The default value, :error, causes delete-triple-store to signal an error. The value :ignore will cause delete-triple-store to do nothing and return nil.

You can use the triple-store-class argument to specify the kind of store you are trying to delete. Like open-triple-store and create-triple-store, delete-triple-store takes additional keyword arguments that vary depending on this class. For example, deleting a remote-triple-store may require specifying a username and password. See open-triple-store or create-triple-store for additional details.

Close any current triple-store and create a new empty one.

:apply-reasoner-p- If true (the default), then the new triple-store will use RDFS++ reasoning. If nil, then the triple-store will have no reasoning enabled.

The new triple-store will be bound to *db* and is also returned by make-tutorial-store.

Open an existing repository with the given designator.

The designator can be a string naming a store, an existing triple-store instance, or a repo spec. For example:

;; open the store named 'example-store' in the root catalog

(open-triple-store "example-store")

;; open the store named 'data' in the catalog 'test'

(open-triple-store "test:data")

;; the above form is equivalent to using the

;; :catalog keyword argument:

(open-triple-store "data" :catalog "test")

;; Using a repo spec to open a remote store with username 'user',

;; password 'pass', host 'myhost', port 12345, catalog 'cat',

;; and repository 'repo'

(open-triple-store "user:pass@myhost:12345/cat:repo")

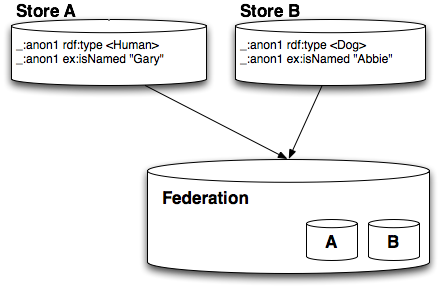

;; create a federation of the triple stores named 'a' and 'b'

(open-triple-store "<a>+<b>") open-triple-store takes numerous keyword arguments. The allowed arguments vary depending on the class of triple-store being opened. For example, a remote-triple-store requires a :server, :user and :password (unless anonymous access is allowed) whereas a local triple-db does not.

For remote-triple-stores, :user, :password, :port, and :server can be specified with the individual arguments.

The possible arguments include (but are not limited to):

:catalog- the catalog to use in the server's configuration file. The catalog may also be specified as part of the requireddesignatorargument, with the catalog name and store name separated by a slash or a colon. If the catalog is not specified as part of thedesignatorand this argument is left unspecified, the root catalog will be used.:server- the server for a remote-triple-store. The default is "127.0.0.1" (which is the local machine).:port- the port to use to find the AllegroGraph server. The default is the value of default-ag-http-port.:user- the username to use when accessing the server (anonymous access, if supported, does not require a user value):password- the password to use when accessing the server (anonymous access, if supported, does not require a password):scheme- the protocol to use to access the server. May be :http or :https. The default is :http.:triple-store-class- the kind of triple-store to open. If left unspecified, thendesignatorwill be treated as a triple-store specification and the triple store class will be determined based on the specification.:read-only- if true, then the triple-store will be opened read-only.:https-verification- See the description of this argument in the documentation for create-triple-store.:proxy- proxy specification forremote-triple-store. Alternatively, proxy settings can be passed via:proxy-host,:proxy-port,:proxy-userand:proxy-passwordparameters. For more details, see the documentation for create-triple-store.:proxy-bypass-list- list of domain name suffix strings for which to bypass the proxy. For more details, see the documentation for create-triple-store.:load-initfile- if true, and if a session on the server is created to open the repository, then re-load the initfile in the session process. This has no effect for a repo opened with the direct lisp interface.:scripts- a list of script file names to load when a session on the server is created to open the repository. This has no effect for a repo opened with the direct lisp interface.:if-does-not-exist- Similarly to the same argument forcl:openfunction, either:error(throw an error if there is no repository matching the spec; default) or:create(create repository given by the spec);nilvalue is not supported. Note that no locking is involved, so the:if-does-not-existoption is not safe for concurrent usage.

Returns a triple store object and sets the value of the variable *db* to that object. If the named triple store is already open, open-triple-store returns a new object connected to the same store.

See Lisp Quick Start for an example of creating and opening triple stores.

Discards any local repository changes, then synchronize to the latest committed repository version on the server. Starts a new transaction.

:db- Specifies the triple store to operate on. Defaults to *db*.:xid- xid, if supplied, must be the xid of a previously prepared commit

Returns the number of triples in a triple store.

Note that reasoning triple-stores do not report accurate triple-counts (doing so might take an inordinate amount of time!). The count returned will be a count of the triples in the ground store.

Note too the count of a multi-master repository may also be inaccurate. See Triple count reports may be inaccurate in the Multi-master Replication document.

:db- Specifies the triple store to operate on. Defaults to *db*.

Returns true if the triple-store with the given name exists in the given catalog (optional).

Returns false if there is no such triple-store.

This function signals an error if communication with the server failed (e.g. due to an invalid value for port, user or password) or if the given catalog does not exist.

You can use the triple-store-class argument to specify the kind of store you are trying to check. Like open-triple-store and create-triple-store, triple-store-exists-p takes additional keyword arguments that vary depending on this class. For example, checking a remote-triple-store may require specifying a username and password. See open-triple-store or create-triple-store for additional details.

Returns the ID of the triple-store. The ID is generated randomly when the triple-store is created. It is an array of four octets.

:db- Specifies the triple store to operate on. Defaults to *db*.

Binds both var and *db* to the triple-store designated by store. The following keyword arguments can also be used:

errorp - controls whether or not

with-triple-storesignals an error if the specified store cannot be found.read-only-p - if specified then with-triple-store will signal an error if the specified triple-store is writable and read-only-p is nil or if the store is read-only and read-only-p is t.

- state - can be :open, :closed or nil. If :open or :closed, an error will be signaled unless the triple-store is in the same state.

Preloads portions of the repository into RAM for faster access.

:db- Specifies the triple store to operate on. Defaults to *db*.:include-strings- If true, string table data will be warmed up.:include-triples- If true, triple indices will be warmed up. The :indices keyword is used to control which indices will be warmed up.:indices-indicesmust be a list of indices to warm up, ornilto warm up all indices.

Evaluate body with var bound to the results of calling open-triple-store on store-arguments. *db* is also bound to the newly opened store. The store will be closed after body executes. store-arguments can be an expression or a list of arguments that will be passed to open-triple-store. Example uses include:

(with-open-triple-store (xyz (ground-triple-store *db*))

...) and

(with-open-triple-store (abc "test" :triple-store-class 'remote-triple-store)

...) Triple Data Operations

Adding Triples

Triples in JSON-LD, N-Quads, N-Triples, Extended N-Quads, RDF/XML, TriG, TriX, and Turtle format can be loaded into the triple-store with the following functions. Note that we recommend using agtool load to import large files.

Triple Loading Functions

The following subsections list functions which load triples from files, streams, URIs, and strings.

Commits while loading triples

All of AllegroGraph triple loading functions take a :commit parameter that controls when the data being loaded is actually committed. This parameter can be:

nil- never commit,t- commit once all of the data has been imported,- a whole number - commit whenever more than this number of triples have been added, and commit at the end of the operation.

Loading Triples

Add triples from source (in N-Triples format) to the triple store.

Returns (as multiple values) the count of triples loaded and the UPI of the graph into which the triples were loaded.

source- can be a stream, a pathname to an N-Triples file, a file URI, an HTTP URI or a string that can be coerced into a pathname.sourcecan also be a list of any of these things. In this case, each item in the list will be imported in turn. When adding statements to a remote-triple-store, a URI source argument will be fetched directly by the server. All other source types are retrieved and delivered to the server via the client interface.In the case where a list of sources is being loaded and the

graphargument is:source, each source in the list will be associated with the URI specifying that source, and that will be used as the graph of the triples loaded from that source. In this case, the second return value will benil.:db- specifies the triple-store into which triples will be loaded. This defaults to the value of *db*.:graph- the graph into which the triples fromsourcewill be placed. It defaults tonilwhich is interpreted asdb's default graph. If supplied, it can be:a string representing a URIref, or a UPI or future-part encoding a URIref, which adds the triples in

sourceto a graph named by that URIthe keyword

:source, in which case thesourceargument will be added as a URI and the loaded triples added to a graph named by that URI. This has the effect of associating the file or URL ofsourcewith the new triples.a list of the values described above.

:override-graph- if true andgraphis a list, add the triples to all graphs in it, otherwise all values in the list except the first one will be ignored.

The following keyword parameters can be used to control the loading process:

:verbose- specifies whether or not progress information is printed as triples are loaded. It defaults tonil.:commit- controls whether and how often commit-triple-store is called while loading. This can benilfor no commit,tfor commit at the end of the load or a whole number to commit whenever more than that number of triples have been added and again at the end of the load. Default is nil.:parse-only- if true then parse the input but don't add anything to the repository.:relax-syntax- For N-Triples and N-Quad files, this flag tells AllegroGraph to be more liberal than standard RDF by accepting:- literals in the Graph position (for N-Quads), and

- IRIs that do not include a colon (:).

:default-attributes- specifies the attributes that will be associated with every triple being loaded. (Because in this file format attributes cannot be specified for individual triples, all triples loaded will have these attributes.) See the Triple Attributes document for more information on attributes.:continue-on-error-p- determine how the parser should behave if it encounters an error parsing or adding a triple. It can be one of:nil- meaning to raise the error normally,t- meaning to ignore the error and continue parsing with the next line in the source,a function of four arguments: a parsing helper data structure, the line in the source where the problem was encountered, the condition that caused the error and the arguments (if any) to that condition.

You can use continue-on-error-p to print all of the problem lines in a file using something like

(load-ntriples

"source"

:continue-on-errorp

(lambda (helper source-line condition &rest args)

(declare (ignore helper args))

(format t "~&Error at line ~:6d - ~a" source-line condition)))

Add the triples in string (in N-Triples format) to the triple store.

See load-ntriples for details on the parameters.

Add triples from the named Turtle-star source to the triple-store. The additional arguments are:

:db- specifies the triple-store into which triples will be loaded; defaults to the value of db.:base-uri- this defaults to the name of the file from which the triples are loaded. It is used to resolve relative URI references during parsing. To use no base-uri, use the empty string "".:graph- the graph to which the triples fromsourcewill be placed. It defaults tonilwhich is interpreted asdb's default graph. If supplied, it can be:a string representing a URIref, or a UPI or future-part encoding a URIref, which adds the triples in

sourceto a graph named by that URIthe keyword

:source, in which case thesourceargument will be added as a URI and the loaded triples added to a graph named by that URI. This has the effect of associating the file or URL ofsourcewith the new triples.a list of the values described above.

:override-graph- if true andgraphis a list, add the triples to all graphs in it, otherwise all values in the list except the first one will be ignored.:default-attributes- specifies the attributes that will be associated with every triple being loaded. (Because in this file format attributes cannot be specified for individual triples, all triples loaded will have these attributes.) See the Triple Attributes document for more information on attributes.

Treat string as a Turtle-star data source and add it to the triple-store.

See load-turtle for details.

Add triples from an RDF/XML file to a triple-store. The arguments are (some do not appear in the argument list but are accepted because &allow-other-keys is specified):

source: a string or pathname identifying an RDF/XML file, or a stream.:db- specifies the triple-store into which triples will be loaded; defaults to the value of *db*.:base-uri- this defaults to the name of the file from which the triples are loaded. It is used to resolve relative URI references during parsing. To use no base-uri, use the empty string "".:graph- the graph to which the triples fromsourcewill be placed. It defaults tonilwhich is interpreted asdb's default graph. If supplied, it can be:a string representing a URIref, or a UPI or future-part encoding a URIref, which adds the triples in

sourceto a graph named by that URIthe keyword

:source, in which case thesourceargument will be added as a URI and the loaded triples added to a graph named by that URI. This has the effect of associating the file or URL ofsourcewith the new triples.a list of the values described above.

:override-graph- if true andgraphis a list, add the triples to all graphs in it, otherwise all values in the list except the first one will be ignored.:resolve-external-references- if true, the external references insourcewill be followed. The default isnil.

Treat string as an RDF/XML data source and add it to the triple-store. For example:

(load-rdf/xml-from-string

"<?xml version=\"1.0\"?>

<rdf:RDF xmlns:rdf=\"http://www.w3.org/1999/02/22-rdf-syntax-ns#\"

xmlns:ex=\"http://example.org/stuff/1.0/\">

<rdf:Description rdf:about=\"http://example.org/item01\">

<ex:prop rdf:parseType=\"Literal\"

xmlns:a=\"http://example.org/a#\"><a:Box required=\"true\">

<a:widget size=\"10\" />

<a:grommit id=\"23\" /></a:Box>

</ex:prop>

</rdf:Description>

</rdf:RDF>

") See load-rdf/xml for details on the parser and the other arguments to this function.

Loading Quads

These functions load quads (triples with a graph optionally specified). Formats which support graphs include N-Quad, TriX, and TriG.

Add triples from source (in N-Quads format) to the triple store.

Returns (as multiple values) the count of triples loaded and the UPI of the graph into which quads missing the graph field were loaded. (load-nquads is following load-ntriples here). If all nquads have a graph field specified, the second return value has no meaning, but the graph field is optional in nquads so some (or all) may be missing and the second return value is then relevant. See load-ntriples for more details on the second return value, particularly when the value of the graph argument is :source and some nquads do not have a graph specified.

source- can be a stream, a pathname to an N-Quads file, a file URI, an HTTP URI or a string that can be coerced into a pathname.sourcecan also be a list of any of these things. In this case, each item in the list will be imported in turn. When adding statements to a remote-triple-store, a URI source argument will be fetched directly by the server. All other source types are retrieved and delivered to the server via the client interface.In the case where a list of sources is being loaded and the

graphargument is:source, each source in the list will be associated with the URI specifying that source, which will be used as the graph for nquads which do not have the graph specified. In this case, the second return value will benil.:db- specifies the triple-store into which nquads will be loaded. This defaults to the value of *db*.:graph- for any nquads which do not have a graph specified (the graph is optional in a nquad), this argument specifies the graph to which they are loaded. The argument defaults tonilwhich is interpreted asdb's default graph. This argument does not affect nquads which do have a graph specified. If supplied, it can be:a string representing a URIref, or a UPI or future-part encoding a URIref, which adds the triples in

sourceto a graph named by that URIthe keyword

:source, in which case thesourceargument will be added as a URI and the loaded nquads which do not have the graph specified will be added to a graph named by that URI. This has the effect of associating the file or URL ofsourcewith the incoming graphless nquads.a list of the values described above.

:override-graph- if true, replace existing graph information withgraph. Ifgraphis a list,override-graphmust be true to add the triples to all graphs in it, otherwise all values in the list except the first one will be ignored.

The following keyword parameters can be used to control the loading process:

:verbose- specifies whether or not progress information is printed as triples are loaded. It defaults tonil.:commit- controls whether and how often commit-triple-store is called while loading. This can benilfor no commit,tfor commit at the end of the load or a whole number to commit whenever more than that number of triples have been added and again at the end of the load. Default is nil.:parse-only- if true then parse the input but don't add anything to the repository.:relax-syntax- For N-Triples and N-Quad files, this flag tells AllegroGraph to be more liberal than standard RDF by accepting:- literals in the Graph position (for N-Quads), and

- IRIs that do not include a colon (:).

:default-attributes- specifies the attributes that will be associated with every triple being loaded. (Because in this file format attributes cannot be specified for individual triples, all triples loaded will have these attributes.) See the Triple Attributes document for more information on attributes.:continue-on-error-p- determine how the parser should behave if it encounters an error parsing or adding a triple. It can be one of:nil- meaning to raise the error normally,t- meaning to ignore the error and continue parsing with the next line in the source,a function of four arguments: a parsing helper data structure, the line in the source where the problem was encountered, the condition that caused the error and the arguments (if any) to that condition.

You can use continue-on-error-p to print all of the problem lines in a file using something like

(load-nquads

"source"

:continue-on-errorp

(lambda (helper source-line condition &rest args)

(declare (ignore helper args))

(format t "~&Error at line ~:6d - ~a" source-line condition)))

Add the triples in string (in N-Quads format) to the triple store.

Returns (as multiple values) the count of nquads loaded and the UPI of the graph into which the quads were loaded (for those quads which do not have the graph specified).

See load-nquads for details on the parameters.

Load a TriX document into the triple store named by db.

source- a string or pathname identifying a file, or a stream.:db- specifies the triple-store into which triples will be loaded; defaults to the value of *db*.:verbose- if true, information about the load progress will be printed to*standard-output*.:graph- a future-part or UPI that identifies a graph, ornil. If it is non-null, any graphs in the TriX document that are equal to the given URI will be treated as the default graph indb.:override-graph- if true, replace existing graph information withgraph. Ifgraphis a list,override-graphmust be true to add the triples to all graphs in it, otherwise all values in the list except the first one will be ignored.:commit- controls whether and how often commit-triple-store is called while loading. This can benilfor no commit,tfor commit at the end of the load or a whole number to commit whenever more than this number of triples have been added. Default is nil.:default-attributes- specifies the attributes that will be associated with every triple being loaded. (Because in this file format attributes cannot be specified for individual triples, all triples loaded will have these attributes.) See the Triple Attributes document for more information on attributes.

graph can also be the symbol :source, in which case namestring is called on the source and treated as a URI, or a list of the values described above.

We have implemented a few extensions to TriX to allow it to represent richer data:

Graphs can be named by

<id>, not just<uri>. (A future revision might also permit literals.)The default graph can be denoted in two ways: by providing

<default/>as the name for the graph; or by providing a graph URI as an argument toload-trix.- In the interest of generality, the predicate position of a triple is not privileged: it can be a literal or blank node (just like the subject and object), not just a URI.

Load a string containing data in TriX format into db

string- a string containing TriX data:db- specifies the triple-store into which triples will be loaded; defaults to the value of *db*.:verbose- if true, information about the load progress will be printed to*standard-output*.:graph- a future-part or UPI that identifies a graph,nilor a list of such values. If it is non-null, any graphs in the TriX document that are equal to the given URI will be treated as the default graph indb.:override-graph- if true, replace existing graph information withgraph. Ifgraphis a list,override-graphmust be true to add the triples to all graphs in it, otherwise all values in the list except the first one will be ignored.:commit- controls whether and how often commit-triple-store is called while loading. This can benilfor no commit,tfor commit at the end of the load or a whole number to commit whenever more than this number of triples have been added.:default-attributes- specifies the attributes that will be associated with every triple being loaded. (Because in this file format attributes cannot be specified for individual triples, all triples loaded will have these attributes.) See the Triple Attributes document for more information on attributes.

See load-trix for more details.

Add triples from the TriG source to the triple-store. source can be a filename or a stream.

The additional arguments are:

:db- specifies the triple-store into which triples will be loaded; defaults to the value of db.:base-uri- this defaults to the name of the file from which the triples are loaded. It is used to resolve relative URI references during parsing. To use no base-uri, use the empty string "".:graph- the graph into which the triples fromsourcewill be placed. It defaults tonilwhich is interpreted asdb's default graph. If supplied, it can be:a string representing a URIref, or a UPI or future-part encoding a URIref, which adds the triples in

sourceto a graph named by that URIthe keyword

:source, in which case thesourceargument will be added as a URI and the loaded triples added to a graph named by that URI. This has the effect of associating the file or URL ofsourcewith the new triples.a list of the values described above.

:override-graph- if true, replace existing graph information withgraph. Ifgraphis a list,override-graphmust be true to add the triples to all graphs in it, otherwise all values in the list except the first one will be ignored.:commit- controls whether and how often commit-triple-store is called while loading. This can benilfor no commit,tfor commit at the end of the load or a whole number to commit whenever more than that number of triples have been added and again at the end of the load.:default-attributes- specifies the attributes that will be associated with every triple being loaded. (Because in this file format attributes cannot be specified for individual triples, all triples loaded will have these attributes.) See the Triple Attributes document for more information on attributes.

Loading Quads with Attributes

NQX format is similar to N-Quad format except each quad may in addition have attributes specified.

Add triples from source in NQX format to the repository. NQX format is N-Quad format plus optional attributes for each triple. Returns (as multiple values) the count of triples loaded and the UPI of the graph into which the triples were loaded.

source- can be a stream, a pathname to an NQX file, a file URI, an HTTP URI or a string that can be coerced into a pathname.sourcecan also be a list of any of these things. In this case, each item in the list will be imported in turn. When adding statements to a remote-triple-store, a URIsourceargument will be fetched directly by the server. All othersourcetypes are retrieved and delivered to the server via the client interface.In the case where a list of sources is being loaded and the

graphargument is:source, each source in the list will be associated with the URI specifying that source, and that will be used as the graph of the triples loaded from that source. In this case, the second return value will benil.:db- specifies the repository into which triples will be loaded. This defaults to the value of *db*.:graph- the graph to which the triples fromsourcewill be placed. It defaults tonilwhich is interpreted asdb's default graph. If supplied, it can be:a string representing a URIref, or a UPI or future-part encoding a URIref, which adds the triples in

sourceto a graph named by that URIthe keyword

:source, in which case thesourceargument will be added as a URI and the loaded triples added to a graph named by that URI. This has the effect of associating the file or URL ofsourcewith the new triples.a list of the values described above.

:override-graph- if true, replace existing graph information withgraph. Ifgraphis a list,override-graphmust be true to add the triples to all graphs in it, otherwise all values in the list except the first one will be ignored.

The following keyword parameters can be used to control the loading process:

:verbose- specifies whether or not progress information is printed as triples are loaded. It defaults tonil.:commit- controls whether and how often commit-triple-store is called while loading. This can benilfor no commit,tfor commit at the end of the load or a whole number to commit whenever more than that number of triples have been added and again at the end of the load. Default is nil.:parse-only- if true then parse the input but don't add anything to the repository.:relax-syntax- For N-Triples and N-Quad files, this flag tells AllegroGraph to be more liberal than standard RDF by accepting:- literals in the Graph position (for N-Quads), and

- IRIs that do not include a colon (:).

:default-attributes- specifies the attributes that will be associated with every triple that does not have attributes specified in the file. See the Triple Attributes document for more information on attributes.:continue-on-error-p- determine how the parser should behave if it encounters an error parsing or adding a triple. It can be one of:nil- meaning to raise the error normally,t- meaning to ignore the error and continue parsing with the next line in the source,a function of four arguments: a parsing helper data structure, the line in the source where the problem was encountered, the condition that caused the error and the arguments (if any) to that condition.

You can use continue-on-error-p to print all of the problem lines in a file using something like

(load-nqx

"source"

:continue-on-errorp

(lambda (helper source-line condition &rest args)

(declare (ignore helper args))

(format t "~&Error at line ~:6d - ~a" source-line condition)))

Add the triples in string (in NQX format) to the triple store.

See load-nqx for details on the parameters.

Loading JSON-LD

Parse and load JSON-LD source to the triple store. Returns the number of triples added. Additional arguments are:

:db- specifies the triple-store into which triples will be loaded; defaults to the value of db.:commit(positive integer) - when provided, commit triple-store after eachcommittriples added and at the end of the operation.:context(string) - when provided it is loaded first and used as top-level context.:graphand:default-attributes- usual arguments for any load function.:base-uri- when provided it is used as default @base property for the document.:resolve-external-references- when true, contexts referenced by URIs are also downloaded and processed. When false (default) an error is signalled when they are encountered.:external-reference-timeout- specifies the timeout for the HTTP request to fetch the context file.

Treat string as a JSON-LD source and load it into the triple store.

See load-json-ld for details.

Loading other data formats

If you need to load a format that AllegroGraph does not yet support, we suggest you use the excellent free tool rapper from http://librdf.org/raptor/rapper.html to create an N-Triples file that AllegroGraph can load. This format is the most efficient for loading large amounts of data. See the AllegroGraph and rapper page for more information.

Checking that UPIs are in the string table

AllegroGraph ensures that the UPIs in a triple being added are already interned in the triple-store's string table. Unless the UPI is in the string table, associated triples can not be serialized. This check does somewhat slow down adding triples. You can suppress the check by wrapping the code adding the triples in the following macro:

Execute body without validating that hashed UPIs are present in the string table.

This effects only db which defaults to *db*.

For example, this code will signal an error:

(add-triple !rdf:x !rdf:y (let ((*db* nil)) (upi !rdf:notHere))) because !rdf:notHere is not in the string-table. This code, however, will add the triple regardless:

(while-not-validating-that-upis-are-in-string-table ()

(add-triple !rdf:x !rdf:y (let ((*db* nil)) (upi !rdf:notHere)))) Note that adds wrapped in this macro are slightly faster but more dangerous since it may be impossible to re-serialize the data if it contains hashed UPIs that are not interned in the string table.

Manually Adding Triples

You can also add triples to a triple-store manually with the add-triple function. The three required arguments, representing the subject, predicate, and object of the triple to be added can be expressed either as:

strings in the N-Triples syntax for URI references and literals;

UPIs such as those returned by the functions intern-resource, intern-literal, new-blank-node, and value->upi;

future-parts such as those created using the !-reader or returned by resource and literal.

Add a triple to the db

The added triple will have the given subject, predicate and object.

:db- the triple-store into which to add the triple; this defaults to *db*.:g- the graph for the new triple. If not specified, then the triple will be added to the default-graph (see default-graph-upi).:attributes- if supplied, this must be an alist of name and value pairs. Each name and value must be a string. Each name must refer to a previously defined attribute. An error will be signaled if any name refers to an undefined attribute or if any value does not conform to the named attribute's constraints.

add-triple returns the triple-id of the new triple.

Anatomy of a Triple

Triple parts: Resources, Literals, UPIs and more

Each triple has five parts (!), a subject, a predicate, an object, a graph and a (unique, AllegroGraph assigned) ID. In RDF, the subject must be a "resource", i.e., a URI or a blank node. The predicate must be a URI. The object may be a URI, a blank node or a "literal". Literals are represented as strings with an optional type indicated by a URI or with a (human) language tag such as en or jp.

2 Blank nodes are anonymous parts whose identity is only meaningful within a given triple-store.

Resources, literals and blank nodes are represented as strings in RDF/XML or N-Triple syntax. AllegroGraph stores these strings in a string dictionary and hashes them to compute a Unique Part Identifier (UPI) for each string. A UPI is a length 12 octet array. One byte of the array is used to identify its type (e.g., is it a resource, a literal, or a blank node). The other 11-bytes are used to either store a hash of the string or to store an encoding of the UPIs contents (see type mapping below for more information about encoded UPIs).

From Strings to Parts

Resources and literals can be denoted with plain Lisp strings in the syntax used in N-Triples files. However this isn't entirely convenient since the N-Triples syntax for literals requires quotation marks which then need to be escaped when writing a Lisp string. For instance the literal whose value is "foo" must be written in N-Triples syntax as "\"foo\"". Similarly -- though not quite as irksome -- URIs must be written enclosed in angle brackets. The string "http://www.franz.com/simple#lastName", passed as an argument to add-triple will be interpreted as a literal, not as the resource indicated by the URI. To refer to the resource in N-Triples syntax you must write "<http://www.franz.com/simple#lastName>". Literals with datatypes or language codes are even more cumbersome to write as strings, requiring both escaped quotation marks and other syntax.

To make it easier to produce correctly formatted N-Triple strings we provide two functions resource and literal. (The ! reader macro, discussed below, can also be used to produce future-parts and UPIs suitable to use as arguments for most of AllegroGraph's API.):

Create a new future-part with the provided values.

string- the string out of which to create the part.:language- If provided,languageshould be a valid RDF language tag.:datatype- If provided, thedatatypemust be a resource. I.e., it can be a string representation of a URI (e.g., "http://foo.com/") or a future-part specifying a resource (e.g., !<http://foo.com>). If it does not specify a resource, a condition of type invalid-datatype-for-literal-error is signaled. An overview of RDF datatypes can be found in the W3C's RDF concepts guide.

Only one of datatype and language can be used at any one time. If both are supplied, a condition of type datatype-and-language-specified-error will be signaled.

Return the provided string as a future-part naming a resource.

If namespace is provided, then string will be treated as a fragment and the future-part returned will be the URIref whose prefix is the string to which namespace maps and whose fragment is string. I.e., if the namespace prefix rdf maps to <http://www.w3.org/1999/02/22-rdf-syntax-ns#>, then the parts created by

(resource "Car" "rdf") and

(resource "http://www.w3.org/1999/02/22-rdf-syntax-ns#Car") will be the same.

Some examples (we will describe and explain the ! notation below):

> (resource "http://www.franz.com/")

!<http://www.franz.com/>

> (literal "Peter")

!"Peter"

> (literal "10" :datatype

"http://www.example.com/datatypes#Integer")

!"10"^^<http://www.example.com/datatypes#Integer>

> (literal "Lisp" :language "EN")

!"Lisp"@en Another issue with using Lisp strings to denote literals and resources is that the strings must, at some point, be translated to the UPIs used internally by the triple-store. This means that if you are going to add a large number of triples containing the same resource or literal and you pass the resource or literal value as a string, add-triple will have to repeatedly convert the string into its UPI.

To prevent this repeated computation, you can use functions like intern-resource or intern-literal to compute the UPI of a string outside of the add-triple loop. The function new-blank-node (or the macro with-blank-nodes) can be used to produce the UPI of a new anonymous node for use as the subject or object of a triple. You can also use encoded ids in place of blank nodes. See Encoded ids for more information

Returns true if upi is a blank node and nil otherwise. For example:

> (blank-node-p (new-blank-node))

t

> (blank-node-p (literal "hello"))

nil Compute the UPI of uri, make sure that it is stored in the string dictionary, and return the UPI.

uri- can be a string, future-part or uri.:db- specifies the triple-store into whichuriwill be interned. This defaults to the value of *db*.:upi- if supplied, then thisupiwill be used to store theuri's UPI; otherwise, a new UPI will be created using make-upi.:namespace- Ifnamespaceis provided, thenuriwill be treated as a fragment and the UPI returned will encode the URIref whose prefix is the string to whichnamespacemaps and whose fragment isuri.

Interned strings are guaranteed to be persistent as long as both of the following are true:

commit-triple-store is called

At least one live triple references the UPI.

See also resource.

Compute the UPI of value treating it as an untyped literal, possibly with a language tag. Ensure that the literal is in the store's string dictionary and return the UPI.

:db- specifies the triple-store into which theuriwill be interned. This defaults to the value of *db*.:upi- if supplied, then thisupiwill be used to store theuri's UPI; otherwise, a new UPI will be created using make-upi.:language- if supplied, then this language will be associated with the literalvalue. See rfc-3066 for details on language tags.:datatype- If supplied, thedatatypemust be a resource. I.e., it can be a string representation of a URI (e.g., "http://foo.com/") or a future-part specifying a resource (e.g., !<http://foo.com>). If it does not specify a resource, a condition of type invalid-datatype-for-literal-error is signaled. An overview of RDF datatypes can be found in the W3C's RDF concepts guide.

Only one of datatype and language can be used at any one time. If both are supplied, a condition of type datatype-and-language-specified-error will be signaled.

Interned strings are guaranteed to be persistent as long as both of the following are true:

commit-triple-store is called

- At least one live triple references the UPI.

db and return the UPI. If a upi is not passed in with the :upi parameter, then a new UPI structure will be created. db defaults to *db*.

This convenience macro binds one or more variables to new blank nodes within the body of the form. For example:

(with-blank-nodes (b1 b2)

(add-triple b1 !rdf:type !ex:Person)

(add-triple b1 !ex:firstName "Gary")

(add-triple b2 !rdf:type !ex:Dog)

(add-triple b2 !ex:firstName "Abbey")

(add-triple b2 !ex:petOf b1)) The following example demonstrates the use of these functions. We use intern-resource to avoid repeatedly translating the URIs used as predicates into UPIs and then use new-blank-node to create a blank node representing each employee and intern-literal to translate the strings in the list employee-data into UPIs. (We could also use literal to convert the strings but using intern-literal is more efficient.)

(defun add-employees (company employee-data)

(let ((first-name (intern-resource "http://www.franz.com/simple#firstName"))

(last-name (intern-resource "http://www.franz.com/simple#lastName"))

(salary (intern-resource "http://www.franz.com/simple#salary"))

(employs (intern-resource "http://www.franz.com/simple#employs"))

(employed-by (intern-resource "http://www.franz.com/simple#employed-by")))

(loop for (first last sal) in employee-data do

(let ((employee (new-blank-node)))

(add-triple company employs employee)

(add-triple employee employed-by company)

(add-triple employee first-name (intern-literal first))

(add-triple employee last-name (intern-literal last))

(add-triple

employee salary

(intern-literal sal :datatype "http://www.franz.com/types#dollars")))))) Note that the difference between resource and intern-resource is that the former only computes the future-part of a string whereas the latter both does this computation and ensures that the string and its UPI are present in the triple-store's string dictionary. intern-resource requires that a database be open while resource does not.

Encoded ids

Encoded ids, described in detail in Encoded ids, are analogous to blank nodes but have certain advantages, such as they can be located using their URI rather than through another node that connects to them (you can only get a handle on a blank node by finding another node which points to it).

Sets up an encoding for prefix, which must be a string, using format, which should be a template (also a string) indicating with brackets, braces, etc. the allowable suffixes. The characters + and * are not allowed in the template string (so, e.g., the string "[0-9]+" is illegal and will fail).

Returns two values: the index of this encoding (a positive integer) and t or nil. t is returned if the encoded-id definition is registered or if the template is modified. nil is returned if the prefix is already registered and the template is unchanged. (This allows the same registration form to be evaluated more than once.) You can redefine the template by calling register-encoded-id-prefix with the same prefix and the new template. But an error will be signaled if you do that after triples have been created using an encoded-id from that prefix.

The number of distinct strings that the template may match must be less than or equal to 2^60. If the intention is to use next-encoded-upi-for-prefix in a multimaster replication cluster (see Multimaster replication), then the pattern must match 2^60 strings and we recommend using the "plain" format to achieve this goal. The value "plain" will produce integer values between 0 (inclusive) and 2^60 (exclusive), thus fulfilling the requirement. Note that the size of the range may change in later releases. Any such change will be noted in the Release Notes.

When not used for those purposes, templates that generate fewer strings are permitted.

See Encoded IDs for further information.

Examples:

;; These first examples use templates that will cause calls

;; to next-encoded-upi-for-prefix to fail if the repository is

;; or becomes a multi-master cluster instance.

(register-encoded-id-prefix

"http://www.franz.com/managers"

"[0-9]{3}-[a-z]{3}-[0-9]{2}") ;; not a suitable value for

;; calls to next-encoded-upi-for-prefix

;; in a multi-master cluster instance

RETURNS

3 ;; you will likely see a different value

t

;; If you run the same form again:

(register-encoded-id-prefix

"http://www.franz.com/managers"

"[0-9]{3}-[a-z]{3}-[0-9]{2}") ;; not a suitable value for

;; calls to next-encoded-upi-for-prefix

;; in a multi-master cluster instance

RETURNS

3 ;; same value as before

nil ;; meaning no action was taken

;; If you modify the template (you can only do this when no stored

;; triple uses en encoded-id from the prefix):

(register-encoded-id-prefix

"http://www.franz.com/managers"

"[0-9]{3}-[a-z]{3}-[0-9]{4}") ;; last value is 4 rather than 2

;; not a suitable value for

;; calls to next-encoded-upi-for-prefix

;; in a multi-master cluster instance

RETURNS

3 ;; same value as before

t ;; meaning made the change

;; These examples will allow calls to next-encoded-upi-for-prefix

;; in multi-master cluster instances since the templates

;; generate 2^60 distinct strings.

(register-encoded-id-prefix

"http://www.franz.com/managers"

"plain") ;; Calls to next-encoded-upi-for-prefix

;; will work in multi-master cluster instances

RETURNS

3 ;; you will likely see a different value

t

;; Another example that has the correct range, since the string

;; has 15 elements and 16 choices for each element resulting in

;; (2^4)^15 = 2^60 possibilities.

(register-encoded-id-prefix

"http://www.franz.com/managers"

"[a-p]{15}") ;; Calls to next-encoded-upi-for-prefix

;; will work in multi-master cluster instances

RETURNS

4 ;; you will likely see a different value Returns upi after modifying it to be the next id for the specified encoded-id prefix. The system maintains an internal order of encoded-ids for a prefix. (This order may not be the obvious one, but it will run over all possible ids for a prefix.) upi should be a upi and will be modified. If you do not want to modify an existing upi, create a new one with (make-upi). Do not use this function if you have created an id for prefix directly using the @@ encoding as doing so may create duplicate ids (which you may think are distinct).

db defaults to *db*.

Example:

triple-store-user(59): (register-encoded-id-prefix

"http://www.franz.com/department"

"[0-9]{4}-[a-z]{3}-[0-9]{2}")

5

t

triple-store-user(60): (setq d1

(next-encoded-upi-for-prefix

"http://www.franz.com/department"

(make-upi)))

{http://www.franz.com/department@@0000-aaa-00}

triple-store-user(61): (setq bu (make-upi))

#(49 2 238 3 16 0 0 0 161 210 ...)

triple-store-user(62): (setq d2

(next-encoded-upi-for-prefix

"http://www.franz.com/department" bu))

{http://www.franz.com/department@@0000-aaa-01}

triple-store-user(63): bu ;; bu is modified

{http://www.franz.com/department@@0000-aaa-01}

triple-store-user(64): d2

{http://www.franz.com/department@@0000-aaa-01}

triple-store-user(65): (setq d3

(next-encoded-upi-for-prefix

"http://www.franz.com/department" bu))

{http://www.franz.com/department@@0000-aaa-02}

triple-store-user(66): bu ;; bu is modified

{http://www.franz.com/department@@0000-aaa-02}

triple-store-user(67): d2 ;; so is d2.

{http://www.franz.com/department@@0000-aaa-02} Gets the list of encoded ids (as returned by collect-encoded-id-prefixes) and applies fn to each element of that list. Since each list element is itself a list of three elements, fn must be or name a function that accepts three arguments.

Example:

(defun foo (&rest args)

(dolist (i args)

(print i)))

(map-encoded-id-prefixes 'foo)

"http://www.franz.com/employees"

"[0-9]{3}-[a-z]{3}-[0-9]{2}"

2

"http://www.franz.com/managers"

"[0-9]{4}-[a-z]{3}-[0-9]{2}"

3

nil Working with Triples

The functions subject, predicate, object, graph, and triple-id provide access to the part UPIs of triples returned by the cursor functions cursor-row and cursor-next-row or collected by get-triples-list.

Get the graph UPI of a triple.

Use (setf graph) to change the UPI. If the upi argument is supplied, then the triple's graph will be copied into it. If it is not supplied then a new UPI will be created.

Get the object UPI of a triple.

Use (setf object) to change the UPI. If the upi argument is supplied, then the triple's object will be copied into it. If it is not supplied then a new UPI will be created.

Get the predicate UPI of a triple.

Use (setf predicate) to change the UPI. If the upi argument is supplied, then the triple's predicate will be copied into it. If it is not supplied then a new UPI will be created.

Get the subject UPI of a triple.

Use (setf subject) to change the UPI. If the upi argument is supplied, then the triple's subject will be copied into it. If it is not supplied then a new UPI will be created.

triple-id of triple

Working with UPIs

You can also create UPIs and triples programmatically; determine the type-code of a UPI, and compare them. You may want to make your own copy of a triple or UPI when using a cursor since AllegroGraph does not save triples automatically (see, e.g., iterate-cursor for more details).

If using encoded UPIs, then the functions upi->value and value->upi will be essential. upi->number will return the number associated with UPIs with a numeric component. You can also check the type of a UPI with either upi-type-code or upi-typep (see type-code->type-name and its inverse type-name->type-code for additional information.)

Copy a triple.

triple- the triple to copynew- (optional) If supplied, then this must be a triple and the copy oftriplewill be placed into it. Otherwise, a new triple will be created.

This function is useful if you want to keep a reference to a triple obtained from a cursor returned by query functions such as get-triples since the cursor reuses the triple data structure for efficiency reasons.

Create a copy of a UPI.

upi- the UPI to copy.

triple1 and triple2 and returns true if they have the same contents and triple-id and false otherwise. See triple-spog= if you need to compare triples regardless of their IDs.

Decodes UPI and returns the value, the type-code and any extra information as multiple values.

upi- the UPI to decode:db- specifies the triple-store from which the UPI originates.:complete?- controls whether the returned value is required to be a complete representation of the UPI. Currently it only affects dates and date-times for which the non-complete representation is a rational while the complete representation is a cons whose car is the same rational and whose cdr is a generalized boolean that's true if and only if the date or date-time has a timezone specified. Complete representations round-trip in the sense of:(upi= (value->upi (upi->value upi :complete? t) (upi-type-code upi)) upi).

The value, type-code, and extra information are interpreted as follows:

value - a string, rational, float, symbol, or cons representing the contents of the UPI. UPIs representing numeric types are converted into an object of the appropriate lisp type (integer, rational or float). Dates and date-times are by default converted into a single integer or rational respectively, standing for the number of seconds since January 1, 1900 GMT. See the

:complete?parameter on how to recover the timezone information. For a default graph upi, value is :default-graph. For a blank node, value is the blank node's integer representation (each unique blank node in a store gets a different number). Everything else is converted to a string.type-code - an integer corresponding to one of the defined UPI types (see supported-types for more information). You can use type-code->type-name to see the English name of the type.

extra - There are three disjoint sets of literals in RDF:

- "simple literals" -- a string.

- literals with datatypes

- literals with language annotations.

A literal cannot have both a language and a datatype; if a literal has type-name :literal-typed (i.e., its type-code is 2), then the extra value is a datatype URI; if its type-code is 3, then extra will be the language code. For some UPIs, including default graphs and blank nodes, the extra is an integer reserved for use by AllegroGraph.

Examples:

> (upi->value (value->upi 22 :byte))

22

18

nil

> (upi->value (upi (literal "hello" :language "en")))

"hello"

3

"en" Note that upi->value will signal an error if called on geospatial UPIs. Use upi->values instead.

See value->upi for additional information.

Returns the numeric value(s) encoded by upi.

The interpretation of this number varies with the UPI's type code:

- for numeric UPIs, the number is their value;

- for date, time, and date-time UPIs, the number is the extended universal time (time zone information is lost);

- for triple ID type UPIs, the number is the triple ID;

- for subscript UPIs, the number is the subscript value;

- for encoded IDs, the number is the encoded ID value as an integer;

- for blank nodes and other gensyms, the number is the UPI's value;

- for boolean UPIs, true will map to 1 and false will map to 0;

- for 2D Geospatial UPIs, the longitude and latitude are returned as two values of type double-float;

- other UPI types signal an error.

This decoding is independent from the value of db. That argument is accepted to be consistent with e.g. upi->value.

type-code of a UPI. This is a one-byte tag that describes how the rest of the bytes in the UPI should be interpreted. Some UPIs are hashed and their representation is stored in a string-table. Other UPIs encode their representation directly (see upi->value and value->upi for additional details).

Returns true if upi has type-code type-code.

upi- a UPItype-code- either a numeric UPI type-code or a keyword representing a type-code. See type-code->type-name and type-name->type-code for more information on type-codes and their translations.

upi-1 and upi-2 bytewise and return true if upi-1 is less than upi-2.

upi-1 and upi-2 bytewise and return true if upi-1 is less than or equal to upi-2.

thing appears to be a UPI. Recall that every UPI is a octet array 12 bytes in length but not every length 12 octet array is a UPI. It is possible, therefore, that upip will return true even if thing is not a UPI.

Returns a UPI that encodes value using type encode-as.

encode-as can be a type-code or a type-name (see supported-types and type-name->type-code for details. If a upi keyword argument is not supplied, then a new UPI will be created. See upi->value for information on retrieving the original value back from an encoded UPI.

The allowable value for value varies depending on the type into which it is being encoded. If the type is numeric, then value may be either a string representing a number in XSD format or a number. If the type is a date, time or dateTime, then value may be a string representing an XSD value of the appropriate type, or a number in extended universal time (this number will be assumed to have its timezone in GMT), or a cons whose car is a number representing an extended universal time and whose cdr is either t, nil, or an integer representing the timezone offset in minutes from GMT. If the cdr is nil, then the date or time will have no timezone information; if it is t, then the date or time will be in GMT. Here are some examples:

> (part->string (value->upi 2 :byte))

"2"^^<http://www.w3.org/2001/XMLSchema#byte>

> (part->string (value->upi "23.5e2" :double-float))

"2.35E3"^^<http://www.w3.org/2001/XMLSchema#double>

> (part->string (value->upi (get-universal-time) :time))

"18:36:54"^^<http://www.w3.org/2001/XMLSchema#time>

> (part->string (value->upi (cons (get-universal-time) -300) :time))

"13:37:08-05:00"^^<http://www.w3.org/2001/XMLSchema#time>

> (part->string (value->upi (cons (get-universal-time) nil) :date-time))

"2015-03-31T18:39:08"^^<http://www.w3.org/2001/XMLSchema#dateTime> Note the lack of timezone in the final example.

Note that value->upi can not create hashed UPIs (i.e., UPIs whose value must be stored in the string dictionary. To create these, use intern-resource and intern-literal.

Comparing parts of triples

triple is the same as part using part= for comparison. The part may be a UPI, a string (in N-Triples syntax) that can be converted to a UPI, or a future-part. See graph-upi= if you know that you will be comparing UPIs.

triple is the same as part using part= for comparison. The part may be a UPI, a string (in N-Triples syntax) that can be converted to a UPI, or a future-part. See object-upi= if you know that you will be comparing UPIs.

triple is the same as part using part= for comparison. The part may be a UPI, a string (in N-Triples syntax) that can be converted to a UPI, or a future-part. See predicate-upi= if you know that you will be comparing UPIs.

triple is the same as part using part= for comparison. The part may be a UPI, a string (in N-Triples syntax) that can be converted to a UPI, or a future-part. See subject-upi= if you know that you will be comparing UPIs.

triple is the same as upi using upi= for comparison. The upi must be a UPI. See graph-part= if you need to compare future-parts or convert strings into UPIs.

triple is the same as upi using upi= for comparison. The upi must be a UPI. See object-part= if you need to compare future-parts or convert strings into UPIs.

triple is the same as upi using upi= for comparison. The upi must be a UPI. See predicate-part= if you need to compare future-parts or convert strings into UPIs.

triple is the same as upi using upi= for comparison. The upi must be a UPI. See subject-part= if you need to compare future-parts or convert strings into UPIs.

Future-Parts and UPIs

Future-parts (which are discussed in detail in their own section) can take the place of UPIs in many of the functions above. For efficiencies sake, functions like upi= assume that they are called with actual UPIs. AllegroGraph provides more general functions for the UPI only variants when it makes sense to do so. For example, future-part= works only with future-parts whereas part= works equally well with any combination of UPIs, future-parts or even strings.

Return whatever is extra in the string of the future-part. The meaning of extra depends on the type of the part:

- resource - the prefix (if applicable)

- literal-typed - the datatype

- literal-language - the language

- other - the value will always be

nil

See future-part-type and future-part-value if you need to access the part's other parts.

Return the value of the future-part. The meaning of value depends on the type of the part:

- resource - the namespace (if any)

- literal-typed - the literal without the datatype

- literal-language - the literal without the language

- other - the value will always be

nil

See future-part-type and future-part-extra if you need to access the others parts (no pun intended) of the part.

Create a 'future' part that will intern itself in new triple stores as necessary.