Introduction

Large language models, or LLMs, are a type of AI that can mimic human intelligence. They use statistical models to analyze vast amounts of data, learning the patterns and connections between words and phrases.

AllegroGraph supports integration with Large Language Models.

A Vector Database organizes data through high-dimensional vectors. It is created by taking fragments of text, sending them to the Large Language Model (like OpenAI's GPT), and receiving back embedding vectors suitable for storing in AllegroGraph.
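The creation step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `split_into_fragments` and `toy_embed` are hypothetical helpers, not AllegroGraph functions, and `toy_embed` merely hashes words into a short fixed-length vector, whereas a real setup would obtain embeddings from the LLM.

```python
import hashlib
import math

def split_into_fragments(text, max_words=8):
    """Split text into fragments of at most max_words words (hypothetical helper)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def toy_embed(fragment, dims=16):
    """Stand-in for an LLM embedding call: hash each word into one of `dims` buckets.

    A real embedding is returned by the model and has many more dimensions.
    """
    vec = [0.0] * dims
    for word in fragment.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        vec[digest[0] % dims] += 1.0
    # Normalize to unit length so vectors are comparable by cosine similarity.
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec

def build_vector_table(text):
    """Return (fragment, embedding) rows, the basic shape of a tiny vector store."""
    return [(frag, toy_embed(frag)) for frag in split_into_fragments(text)]

table = build_vector_table(
    "We the People of the United States, in Order to form a more perfect Union, "
    "establish Justice, insure domestic Tranquility"
)
for fragment, vector in table:
    print(len(vector), fragment)
```

Each row pairs a fragment with its vector, which is the association a vector database stores.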

Supported models

Currently only the OpenAI GPT and the SERP API models are supported. Separate keys are required for each model.

The New WebView tool supports an LLM Playground where you can try out LLM queries that require the OpenAI API key, which makes it easy to experiment with such queries. The playground does not store the SERP API key, so it must be specified (as described below) in queries that use the SERP API.

Magic properties and functions support interfacing with the models.

The default model for the llm:response, llm:askMyDocuments, and llm:askForTable magic properties (see Use of Magic predicates and functions) is gpt-4. You can select a different OpenAI model, for example gpt-3.5-turbo, by placing this form into the AllegroGraph initfile:

(setf gpt::openai-default-ask-chat-model "gpt-3.5-turbo")

You need an API key to use any of the LLM predicates

The keys shown below are not valid OpenAI API or serpAPI keys. They are used to illustrate where your valid key will go.

To get an OpenAI API key go to the OpenAI website and sign up. To get a serpAPI key, go to https://serpapi.com/.

There are various ways to configure your API key: as a query option prefix, or in the AllegroGraph configuration.

The actual openaiApiKey starts with sk- followed by a lot of numbers and letters. The serpApiKey is just a lot of numbers and letters. In the query option prefixes just below, the keys are preceded with franz: and the whole thing is wrapped in angle brackets. Similarly for the query options just below that.

As a query option prefix, write (the keys are not valid, replace with your valid key(s)):

PREFIX franzOption_openaiApiKey: <franz:sk-U01ABc2defGHIJKlmnOpQ3RstvVWxyZABcD4eFG5jiJKlmno>

PREFIX franzOption_serpApiKey: <franz:11111111111120b7c4d3d04a9837e3362edb2733318effec25c447445dfbf594>

In the config file, the following syntax causes the option to be applied to all queries (see the Server Configuration and Control document for information on the config file):

QueryOption openaiApiKey=<franz:sk-U01ABc2defGHIJKlmnOpQ3RstvVWxyZABcD4eFG5jiJKlmno>

QueryOption serpApiKey=<franz:11111111111120b7c4d3d04a9837e3362edb2733318effec25c447445dfbf594>

The first option (openaiApiKey) is applied to each query in the LLM Playground, but the second (serpApiKey) is not.

You can also put the value in the data/settings/default-query-options file:

(("franzOption_openaiApiKey" "<franz:sk-U01ABc2defGHIJKlmnOpQ3RstvVWxyZABcD4eFG5jiJKlmno>"))

(("franzOption_serpApiKey" "<franz:11111111111120b7c4d3d04a9837e3362edb2733318effec25c447445dfbf594>"))

Remember your key is valuable (its use may cost money and others with the key can use it at your cost) so protect it.
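One way to keep keys out of saved query text is to read them from the environment and prepend the PREFIX lines just before a query is sent. The sketch below assumes the query is submitted from a Python client. The environment variable names `OPENAI_API_KEY` and `SERPAPI_KEY` and both helper functions are illustrative choices, not part of AllegroGraph.

```python
import os

def key_prefixes(openai_key=None, serp_key=None):
    """Build the PREFIX lines that pass API keys as query options."""
    lines = []
    if openai_key:
        lines.append(f"PREFIX franzOption_openaiApiKey: <franz:{openai_key}>")
    if serp_key:
        lines.append(f"PREFIX franzOption_serpApiKey: <franz:{serp_key}>")
    return lines

def with_keys(query, env=os.environ):
    """Prepend key PREFIX lines, reading keys from (assumed) environment variables."""
    lines = key_prefixes(env.get("OPENAI_API_KEY"), env.get("SERPAPI_KEY"))
    return "\n".join(lines + [query])

query = (
    "PREFIX llm: <http://franz.com/ns/allegrograph/8.0.0/llm/>\n"
    'SELECT * { ?response llm:response "List 5 US States.". }'
)
# The key below is a placeholder, not a valid OpenAI API key.
print(with_keys(query, env={"OPENAI_API_KEY": "sk-not-a-real-key"}))
```

A key that is not set is simply omitted, so the same helper works whether or not a serpAPI key is available.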

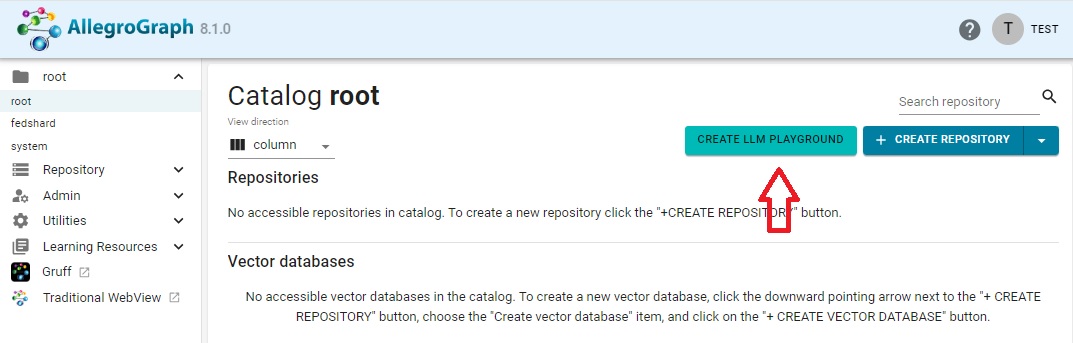

The New WebView LLM Playground prompts you for the OpenAI API key at the start of a session. First click on the Create LLM Playground button:

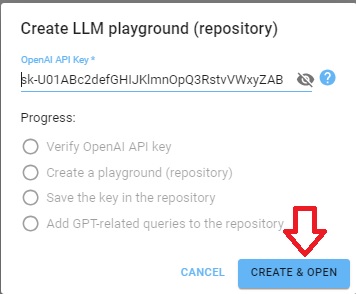

The following dialog comes up. Enter your OpenAI API key as shown (again that key is not actually valid):

Click Create & Open to create and open a repo which automatically adds a PREFIX with the OpenAI API key to every query. (It does not add a PREFIX with the serpAPI key. You must supply that yourself if you use the askSerp magic predicate. See the serpAPI example.)

SPARQL LLM magic predicates (LLMagic)

AllegroGraph LLMagic supports LLM magic predicates (internal functions which act like SPARQL operators). These are listed in the SPARQL Magic Property document under the heading Large Language Models. (Click on a listed magic property to see additional documentation.) See also LLMagic functions listed here.

For example, the http://franz.com/ns/allegrograph/8.0.0/llm/response magic property prompts GPT for a response. We can ask for a list of 5 US states:

PREFIX llm: <http://franz.com/ns/allegrograph/8.0.0/llm/>

SELECT * { ?response llm:response "List 5 US States.". }

which produces the response:

response

"California"

"Texas"

"Florida"

"New York"

"Pennsylvania"

Vector databases

A vector database is a database that can store vectors (fixed-length lists of numbers) along with other data items. Vector databases typically implement one or more Approximate Nearest Neighbor (ANN) algorithms, so that one can search the database with a query vector to retrieve the closest matching database records. (Wikipedia)

Tools in AllegroGraph allow embedding natural language text obtained from sources like ChatGPT into a vector representation. A vector database in AllegroGraph is a table of embeddings and the associated original text. An AllegroGraph Vector Database associates embeddings with literals found in the triple objects of a graph database.
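To make the idea of a table of embeddings plus original text concrete, here is a minimal Python sketch of such a table and a search over it. It is not how AllegroGraph implements vector databases: the three-dimensional vectors are made up for illustration (real embeddings come from the LLM), and the search is an exact scan by cosine similarity rather than an approximate nearest neighbor algorithm.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def nearest(table, query_vector, top_n=2):
    """Exact search: score every row and return the best top_n as (score, text)."""
    scored = [(cosine_similarity(vec, query_vector), text) for text, vec in table]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_n]

# Made-up 3-dimensional embeddings paired with their original text.
table = [
    ("Texas is a US state.", [0.9, 0.1, 0.0]),
    ("Florida is a US state.", [0.8, 0.2, 0.1]),
    ("A triple has a subject, predicate, and object.", [0.0, 0.1, 0.9]),
]

for score, text in nearest(table, [1.0, 0.0, 0.0]):
    print(f"{score:.3f}  {text}")
```

Scanning every row is fine for a handful of entries. ANN algorithms trade a little accuracy for speed when the table grows large.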

Creating and using vector databases, along with examples, is described in the LLM Embed Specification document. There are several examples using vector databases in this AllegroGraph documentation. One using the writings of Noam Chomsky is shown here. Another, which allows querying the United States Constitution, is here. A third, which finds connections between historical figures, is here.

LLM Examples

See LLM Examples. The LLM Split Specification document also contains some examples. The LLM Embed Specification has some vector database examples.