Java Sesame API Tutorial for AllegroGraph

This is an introduction to the Java client API to the AllegroGraph RDFStore™ from Franz Inc.

The Java Sesame API offers convenient and efficient

access to an AllegroGraph server from a Java-based application. This API provides methods for

creating, querying and maintaining RDF data, and for managing the stored triples.

The Java Sesame API emulates the Eclipse RDF4J (formerly known as Aduna Sesame) API to make it easier to migrate from RDF4J to AllegroGraph.

Contents

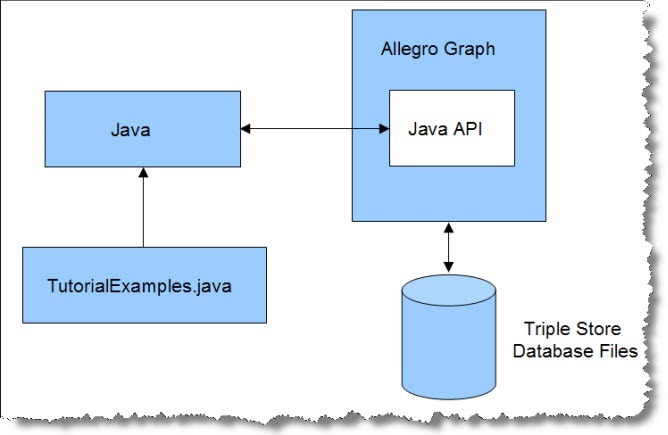

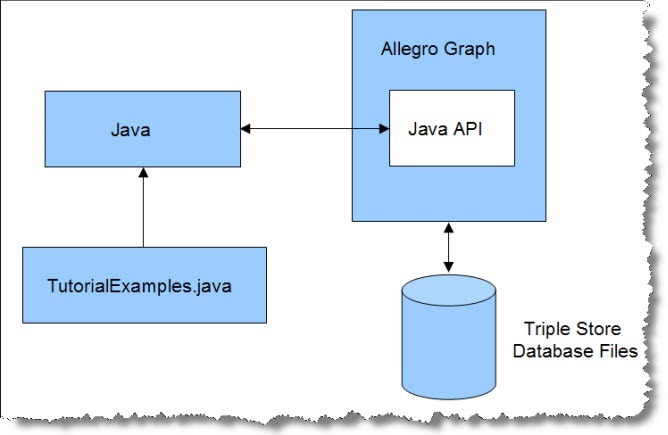

The Java client tutorial rests on a simple architecture involving AllegroGraph, disk-based data files, Java, and a file of Java examples called TutorialExamples.java.

The AllegroGraph Java client distribution contains the Java Sesame API.

The Java client communicates with the AllegroGraph Server through HTTP port 10035 in this tutorial. Java and AllegroGraph may be installed on the same computer, but in practice one server is shared by multiple clients running on different machines.

Load TutorialExamples.java into Java to view the tutorial examples. |

|

Each lesson in TutorialExamples.java is encapsulated in a Java method, named exampleN(), where N ranges from 0 to 21 (or more). The function names are referenced in the title of each section to make it easier to compare the tutorial text and the living code of the examples file.

The tutorial examples can be run on a Linux system, running AllegroGraph and the examples on the same computer ("localhost"). The tutorial assumes that AllegroGraph has been installed and configured using the procedure posted on this webpage.

We need to clarify some terminology before proceeding.

- "RDF" is the Resource Description Framework defined by the World Wide Web Consortium (W3C). It provides an elegantly simple means for describing multi-faceted resource objects and for linking them into complex relationship graphs. AllegroGraph Server creates, searches, and manages such RDF graphs.

- A "URI" is a Uniform Resource Identifier. It is a label used to uniquely identify various types of entities in an RDF graph. A typical URI looks a lot like a web address: http://example.org/project/class#number. In spite of the resemblance, a URI is not a web address. It is simply a unique label.

- A "triple" is a data statement, a "fact," stored in RDF format. It states that a resource has an attribute with a value. It consists of three fields:

- Subject: The first field contains the URI that uniquely identifies the resource that this triple describes.

- Predicate: The second field contains the URI identifying a property of this resource, such as its color or size, or a relationship between this resource and another one, such as parentage or ownership.

- Object: The third field is the value of the property. It could be a literal value, such as "red," or the URI of a linked resource.

- A "quad" is a triple with an added "context" field, which is used to divide the repository into "subgraphs." This context or subgraph is just a URI label that appears in the fourth field of related triples.

- A "quint" is a quad with a fifth field used for the "tripleID." AllegroGraph Server implements all triples as quints behind the scenes. The fourth and fifth fields are often ignored, however, so we speak casually of "triples," and sometimes of "quads," when it would be more rigorous to call them all "quints."

- A "resource description" is defined as a collection of triples that all have the same URI in the subject field. In other words, the triples all describe attributes of the same thing.

- A "statement" is a client-side Java object that describes a triple (quad, quint).

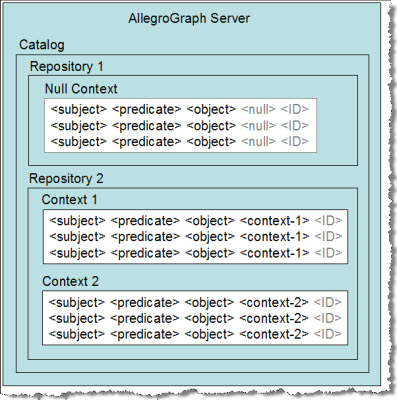

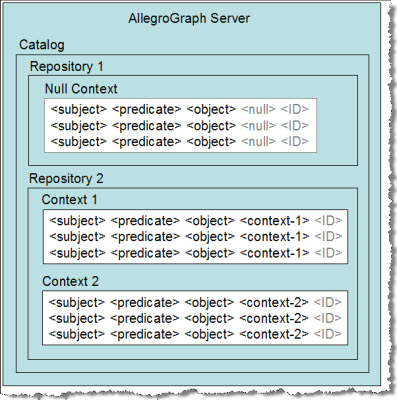

In the context of AllegroGraph Server:

- A "catalog" is a list of repositories owned by an AllegroGraph server.

- A "repository" is a collection of triples within a Catalog, stored and indexed on a hard disk.

- A "context" is a subgraph of the triples in a repository.

- If contexts are not in use, the triples are stored in the background (default) graph.

|

|

Creating Users with WebView Return to Top

Each connection to an AllegroGraph server runs under the credentials of a registered AllegroGraph user account.

Initial Superuser Account

The installation instructions for AllegroGraph advise you to create a default superuser called "test", with password "xyzzy". This is the user (and password) expected by the tutorial examples. If you created this account as directed, you can proceed to the next section and return to this topic at a later time when you need to create non-superuser accounts.

If you created a different superuser account you'll have to edit the TutorialExamples.java file before proceeding. Modify these entries near the top of the file:

static private final String USERNAME = "test";

static private final String PASSWORD = "xyzzy";

Otherwise you'll get an authentication failure when you attempt to connect to the server.

Users, Permissions, Access Rules, and Roles

AllegroGraph user accounts may be given any combination of the following three permissions:

- Superuser

- Start Session

- Evaluate Arbitrary Code

In addition, a user account may be given read, write or read/write access to individual repositories.

You can also define a role (such as "librarian") and give the role a set of permissions and access rules. Then you can assign several users to a shared role. This lets you manage their permissions and access by editing the role instead of the individual user accounts.

A superuser automatically has all possible permissions and unlimited access. A superuser can also create, manage and delete other user accounts. Non-superusers cannot view or edit account settings.

A user with the Start Sessions permission can use the AllegroGraph features that require spawning a dedicated session, such as Transactions and Social Network Analysis. If you try to use these features without the appropriate permission, you'll encounter authentication errors.

A user with permission to Evaluate Arbitrary Code can run Prolog Rule Queries.

This user can also do anything else that allows executing Lisp code, such as defining select-style generators, or doing eval-in-server, as well as loading server-side files.

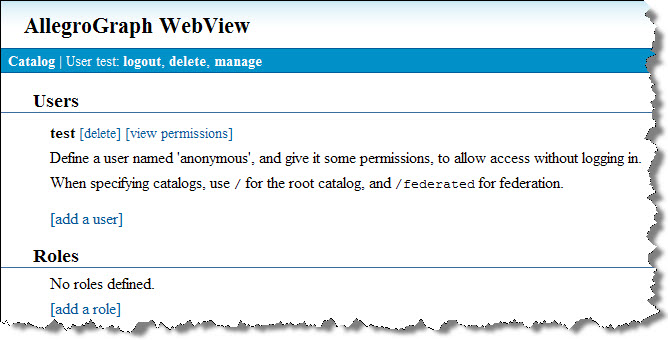

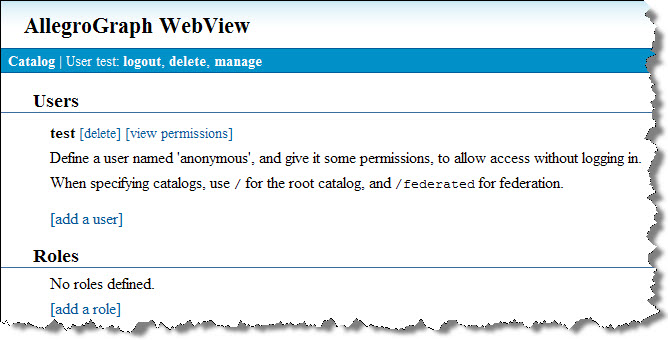

WebView

WebView is AllegroGraph's HTTP-based graphical user interface for user and repository management. It provides a SPARQL endpoint for querying your triple stores as well as various tools that let you create and maintain triple stores interactively.

To connect to WebView, simply direct your Web browser to the AllegroGraph port of your server. If you have installed AllegroGraph locally (and used the default port number), use:

http://localhost:10035

You will be asked to log in. Use the superuser credentials described in the previous section.

The first page of WebView is a summary of your catalogs, repositories, and federations. Click the user account link in the lower left corner of the page. This exposes the Users and Roles page.

This is the environment for creating and managing user accounts.

To create a new user, click the [add a user] link. This exposes a small form where you can enter the username (one symbol) and password. Click OK to save the new account.

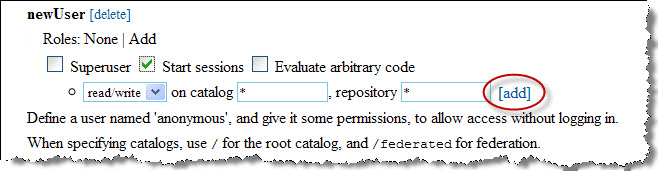

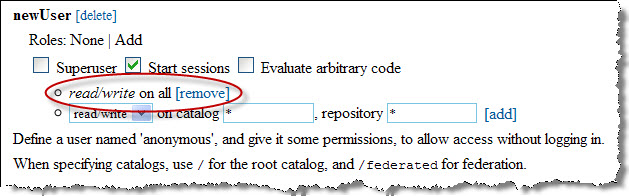

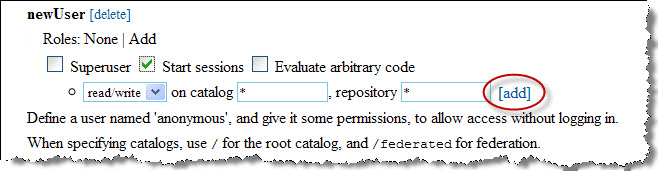

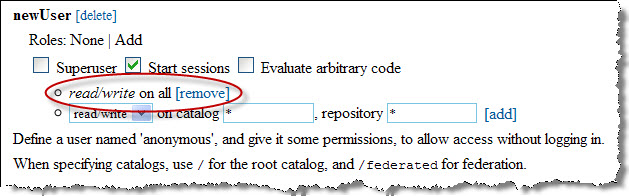

The new user will appear in the list of users. Click the [view permissions] link to open a control panel for the new user account:

Use the checkboxes to apply permissions to this account (superuser, start session, evaluate arbitrary code).

It is important that you set up access permissions for the new user. Use the form to create an access rule by selecting read, write or read/write access, naming a catalog (or * for all), and naming a repository within that catalog (or * for all). Click the [add] link. This creates an access rule for your new user. The access rule will appear in the permissions display:

This new user can log in and perform transactions on any repository in the system.

To repeat, the "test" superuser is all you need to run all of the tutorial examples. This section is for the day when you want to issue more modest credentials to some of your operators.

Creating a Repository and Triple Indices (example1()) Return to Top

The first task is to start our AllegroGraph Server and open a repository. This task is implemented in example1() from TutorialExamples.java.

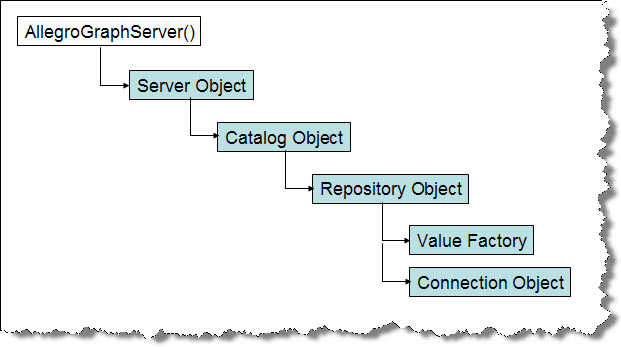

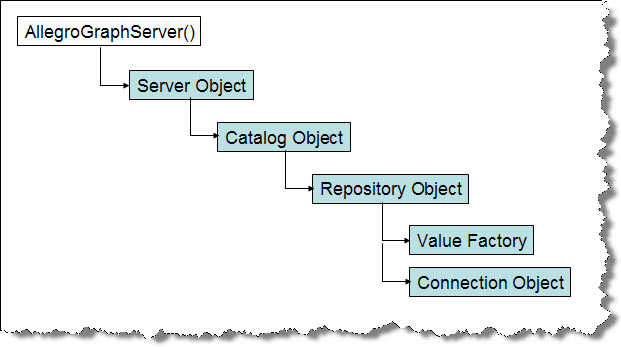

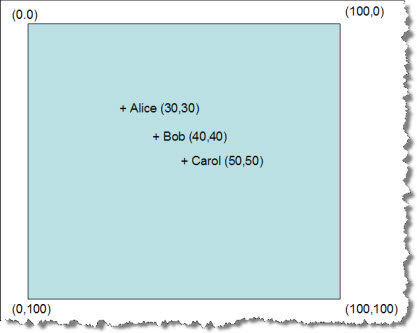

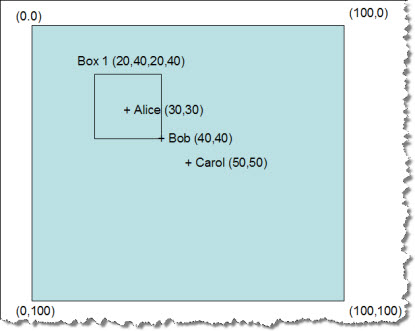

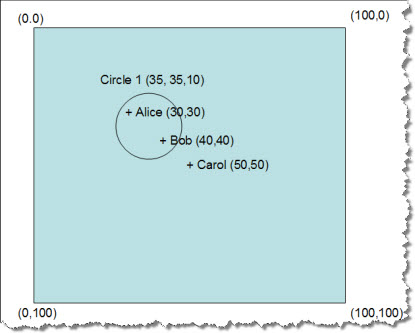

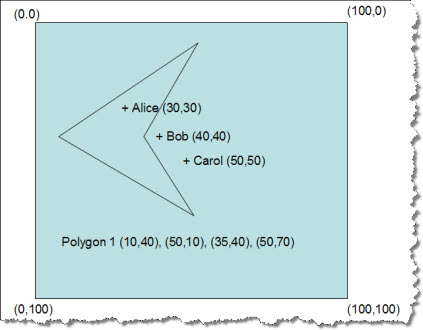

In example1() we build a chain of Java objects, ending in a "connection" object that lets us manipulate triples in a specific repository. The overall process of generating the connection object follows

this diagram:

The example1() function opens (or creates) a repository by building a series of client-side objects, culminating in a "connection" object. The connection object will be passed to other methods in TutorialExamples.java.

We will also make use of the repository's "value factory." |

|

The example first connects to an AllegroGraph Server by providing the endpoint (host IP address and port number) of an already-launched AllegroGraph server. You'll also need a user name and password. This creates a client-side server object, which can access the AllegroGraph server's list of available catalogs through the listCatalogs() method:

public class TutorialExamples {

private static final String SERVER_URL = "http://localhost:8080";

private static final String CATALOG_ID = "scratch";

private static final String REPOSITORY_ID = "javatutorial";

private static final String USERNAME = "test";

private static final String PASSWORD = "xyzzy";

private static final File DATA_DIR = new File(".");

private static final String FOAF_NS = "http://xmlns.com/foaf/0.1/";

/**

* Creating a Repository

*/

public static AGRepositoryConnection example1(boolean close) throws Exception {

// Tests getting the repository up.

println("\nStarting example1().");

AGServer server = new AGServer(SERVER_URL, USERNAME, PASSWORD);

println("Available catalogs: " + server.listCatalogs());

This is the output so far:

Starting example1().

Available catalogs: [/, java-catalog, python-catalog]

These examples use either the default root catalog (denoted as "/") or catalogs dedicated to specific tutorials.

In the next line of example1(), we use the server's getRootCatalog() method to create a client-side catalog object connected to AllegroGraph's default rootCatalog, as defined in the AllegroGraph configuration file. The catalog object has methods such as getCatalogName() and getAllRepositories() that we can use to investigate the catalogs on the AllegroGraph server. When we look inside the root catalog, we can see which repositories are available:

AGCatalog catalog = server.getRootCatalog();

println("Available repositories in catalog " +

(catalog.getCatalogName()) + ": " +

catalog.listRepositories());

The corresponding output lists the available repositories. (When you run the examples, you may see a different list of repositories.)

Available repositories in catalog /: [pythontutorial, javatutorial]

In the examples, we are careful to close open repositories and to delete previous state before continuing. We are just erasing the blackboard before starting a new lesson. You probably would not do this in your actual application:

closeAll();

catalog.deleteRepository(REPOSITORY_ID);

The next step is to create a client-side repository object representing the repository we wish to open, by calling the

createRepository() method of the catalog object. We have to provide the name of the desired repository (REPOSITORY_ID in this case, which is bound to the string "javatutorial").

AGRepository myRepository = catalog.createRepository(REPOSITORY_ID);

println("Got a repository.");

myRepository.initialize();

println("Initialized repository.");

println("Repository is writable? " + myRepository.isWritable());

A new or renewed repository must be initialized, using the initialize() method of the repository object. If you try to initialize a repository twice you get a warning message in the Java window but no exception. Finally we check to see that the repository is writable.

Got a repository.

Initialized repository.

Repository is writable? true

The goal of all this object-building has been to create a client-side repositoryConnection object, which we casually refer to as the "connection" or "connection object." The repository object's getConnection() method returns this connection object. The function closeBeforeExit() maintains a list of connection objects and automatically cleans them up when the client exits.

AGRepositoryConnection conn = myRepository.getConnection();

closeBeforeExit(conn);

println("Got a connection.");

println("Repository " + (myRepository.getRepositoryID()) +

" is up! It contains " + (conn.size()) +

" statements."

);

The size() method of the connection object returns how many triples are present. In the example1() function, this number should always be zero because we deleted and recreated the repository. This is the output in the Java window:

Got a connection.

Repository javatutorial is up! It contains 0 statements.

Whenever you create a new repository, you should stop to consider which kinds of triple indices you will need. This is an important efficiency decision. AllegroGraph uses a set of sorted indices to quickly identify a contiguous block of triples that are likely to match a specific query pattern.

These indices are identified by names that describe their organization. The default set of indices are called spogi, posgi, ospgi, gspoi, gposi, gospi, and i , where:

- S stands for the subject URI.

- P stands for the predicate URI.

- O stands for the object URI or literal.

- G stands for the graph URI.

- I stands for the triple identifier (its unique id number within the triple store).

The order of the letters denotes how the index has been organized. For instance, the spogi index contains all of the triples in the store, sorted first by subject, then by predicate, then by object, and finally by graph. The triple id number is present as a fifth column in the index. If you know the URI of a desired resource (the subject value of the query pattern), then the spogi index lets you retrieve all triples with that subject as a single block.

The idea is to provide your respository with the indices that your queries will need, and to avoid maintaining indices that you will never need.

We can use the connection object's listValidIndices() method to examine the list of all possible AllegroGraph triple indices:

List<String> indices = conn.listValidIndices();

println("All valid triple indices: " + indices);

This is the list of all possible valid indices:

All valid triple indices: [spogi, spgoi, sopgi, sogpi, sgpoi, sgopi, psogi,

psgoi, posgi, pogsi, pgsoi, pgosi, ospgi, osgpi, opsgi, opgsi, ogspi, ogpsi,

gspoi, gsopi, gpsoi, gposi, gospi, gopsi, i]

AllegroGraph can generate any of these indices if you need them, but it creates only seven indices by default. We can see the current indices by using the connection object's listIndices() method:

indices = conn.listIndices();

println("Current triple indices: " + indices);

There are currently seven indices:

Current triple indices: [i, gospi, gposi, gspoi, ospgi, posgi, spogi]

The indices that begin with "g" are sorted primarily by subgraph (or "context"). If you application does not use subgraphs, you should consider removing these indices from the repository. You don't want to build and maintain triple indices that your application will never use. This wastes CPU time and disk space. The connection object has a convenient dropIndex() method:

println("Removing graph indices...");

conn.dropIndex("gospi");

conn.dropIndex("gposi");

conn.dropIndex("gspoi");

indices = conn.listIndices();

println("Current triple indices: " + indices);

Having dropped three of the triple indices, there are now four remaining:

Removing graph indices...

Current triple indices: [i, ospgi, posgi, spogi]

The i index is for deleting triples by using the triple id number. The ospgi index is sorted primarily by object value, which makes it possible to grab a range of object values as a single block of triples from the index. Similarly, the posgi index lets us reach for a block of triples that all share the same predicate. We mentioned previously that the spogi index lets us retrieve blocks of triples that all have the same subject URI.

As it happens, we may have been overly hasty in eliminating all of the graph indices. AllegroGraph can find the right matches as long as there is any one index present, but using the "right" index is much faster. Let's put one of the graph indices back, just in case we need it. We'll use the connection object's addIndex() method:

println("Adding one graph index back in...");

conn.addIndex("gspoi");

indices = conn.listIndices();

println("Current triple indices: " + indices);

Adding one graph index back in...

Current triple indices: [i, gspoi, ospgi, posgi, spogi]

In its default mode, example1() closes the connection. It can optionally return the connection when called by another method, as will occur in several examples below. If you are done with the connection, closing it and shutting it down will free resources.

if (close) {

conn.close();

myRepository.shutDown();

return null;

}

return conn;

}

Asserting and Retracting Triples (example2()) Return to Top

In example2(), we show how

to create resources describing two

people, Bob and Alice, by asserting individual triples into the repository. The example also retracts and replaces a triple. Assertions and retractions to the triple store

are executed by 'add' and 'remove' methods belonging to the connection object, which we obtain by calling the example1() function (described above).

Before asserting a triple, we have to generate the URI values for the subject, predicate and object fields. The Java Sesame API to AllegroGraph Server predefines a number of classes and predicates for the RDF, RDFS, XSD, and OWL ontologies. RDF.TYPE is one of the predefined predicates we will use.

The 'add' and 'remove' methods take an optional 'contexts' argument that

specifies one or more subgraphs that are the target of triple assertions

and retractions. When the context is omitted, triples are asserted/retracted

to/from the background graph. In the example below, facts about Alice and Bob

reside in the background graph.

The example2() function begins by calling example1() to create the appropriate connection object, which is bound to the variable conn. We will also need the repository's "value factory" object, because it has many useful methods. If we have the connection object, we can retrieve its repository object, and then the value factory. We will need both objects in order to proceed.

public static AGRepositoryConnection example2(boolean close) throws RepositoryException {

// Asserts some statements and counts them.

AGRepositoryConnection conn = example1(false);

AGValueFactory vf = conn.getRepository().getValueFactory();

println("Starting example example2().");

The next step is to begin assembling the URIs we will need for the new triples. The valueFactory's createURI() method generates a URI from a string. These are the subject URIs identifying the resources "Bob" and "Alice":

URI alice = vf.createURI("http://example.org/people/alice");

URI bob = vf.createURI("http://example.org/people/bob");

Both Bob and Alice will have a "name" attribute.

URI name = vf.createURI("http://example.org/ontology/name");

Bob and Alice will both be rdf:type "Person". Note that this is the name of a class, and is therefore capitalized.

URI person = vf.createURI("http://example.org/ontology/Person");

The name attributes will contain literal values. We have to generate the Literal objects from strings:

Literal bobsName = vf.createLiteral("Bob");

Literal alicesName = vf.createLiteral("Alice");

The next line prints out the number of triples currently in the repository.

println("Triple count before inserts: " +

(conn.size()));

Triple count before inserts: 0

Now we assert four triples, two for Bob and two more for Alice, using the connection object's add() method. Note the use of RDF.TYPE, which is an attribute of the RDF object in

org.eclipse.rdf4j.model.vocabulary. This attribute is set the the URI of the rdf:type predicate, which is used to indicate the class of a resource.

// Alice's name is "Alice"

conn.add(alice, name, alicesName);

// Alice is a person

conn.add(alice, RDF.TYPE, person);

//Bob's name is "Bob"

conn.add(bob, name, bobsName);

//Bob is a person, too.

conn.add(bob, RDF.TYPE, person);

After the assertions, we count triples again (there should be four) and print out the triples for inspection. The "null" arguments to the getStatements() method say that we don't want to restrict what values may be present in the subject, predicate, object or context positions. Just print out all the triples.

println("Triple count after inserts: " +

(conn.size()));

RepositoryResult<Statement> result = conn.getStatements(null, null, null, false);

while (result.hasNext()) {

Statement st = result.next();

println(st);

}

This is the output at this point. We see four triples, two about Alice and two about Bob:

Triple count after inserts: 4

(http://example.org/people/alice, http://example.org/ontology/name, "Alice") [null]

(http://example.org/people/alice, http://www.w3.org/1999/02/22-rdf-syntax-ns#type, http://example.org/ontology/Person) [null]

(http://example.org/people/bob, http://example.org/ontology/name, "Bob") [null]

(http://example.org/people/bob, http://www.w3.org/1999/02/22-rdf-syntax-ns#type, http://example.org/ontology/Person) [null]

We see two resources of type "person," each with a literal name. The [null] value at the end of each triple indicates that the triple is resident in the default background graph, rather than being assigned to a specific named subgraph.

The next step is to demonstrate how to remove a triple. Use the remove() method of the connection object, and supply a triple pattern that matches the target triple. In this case we want to remove Bob's name triple from the repository. Then we'll count the triples again to verify that there are only three remaining. Finally, we re-assert Bob's name so we can use it in subsequent examples, and we'll return the connection object.

conn.remove(bob, name, bobsName);

println("Removed one triple.");

println("Triple count after deletion: " +

(conn.size()));

Removed one triple.

Triple count after deletion: 3

Example2() ends with a condition that either closes the connection or passes it on to the next method for reuse.

A SPARQL Query (example3()) Return to Top

SPARQL stands for the "SPARQL Protocol and RDF Query Language," a recommendation of the World Wide Web Consortium (W3C). SPARQL is a query language for retrieving RDF triples.

Our next example illustrates how to evaluate a SPARQL query. This is the simplest query, the one that returns all triples. Note that example3() continues with the four triples created in example2().

public static void example3() throws Exception {

AGRepositoryConnection conn = example2(false);

println("\nStarting example3().");

try {

String queryString = "SELECT ?s ?p ?o WHERE {?s ?p ?o .}";

The SELECT clause returns the variables ?s, ?p and ?o. The variables are bound to the subject, predicate and object values of each triple that satisfies the WHERE clause. In this case the WHERE clause is unconstrained. The dot (.) in the fourth position signifies the end of the pattern.

The connection object's prepareTupleQuery() method

creates a query object that can be evaluated one or more times. (A "tuple" is an ordered sequence of data elements.) The results are returned in a TupleQueryResult iterator that gives access to a sequence of bindingSets.

AGTupleQuery tupleQuery = conn.prepareTupleQuery(QueryLanguage.SPARQL, queryString);

TupleQueryResult result = tupleQuery.evaluate();

Below we illustrate one method for extracting the values

from a binding set, indexed by the name of the corresponding column variable

in the SELECT clause.

try {

while (result.hasNext()) {

BindingSet bindingSet = result.next();

Value s = bindingSet.getValue("s");

Value p = bindingSet.getValue("p");

Value o = bindingSet.getValue("o");

System.out.format("%s %s %s\n", s, p, o);

}

http://example.org/people/alice http://www.w3.org/1999/02/22-rdf-syntax-ns#type http://example.org/ontology/Person

http://example.org/people/alice http://example.org/ontology/name "Alice"

http://example.org/people/bob http://www.w3.org/1999/02/22-rdf-syntax-ns#type http://example.org/ontology/Person

http://example.org/people/bob http://example.org/ontology/name "Bob"

If one wants only the number of results, using the count() method is more efficient than using evaluate() and counting the returned results client-side. The repositoryConnection class is designed to be created for the duration of a sequence of updates and queries, and then closed. In practice, many AllegroGraph applications keep a connection open indefinitely. However, best practice dictates that the connection should be closed, as illustrated below. The same hygiene applies to the iterators that generate binding sets.

} finally {

result.close();

}

// Just the count now. The count is done server-side,

// and only the count is returned.

long count = tupleQuery.count();

println("count: " + count);

} finally {

conn.close();

}

Statement Matching (example4()) Return to Top

The getStatements() method of the connection object provides a simple way to perform unsophisticated queries. This method lets you enter a mix of required values and wildcards, and retrieve all matching triples. (If you need to perform sophisticated tests and comparisons you should use the SPARQL query instead.)

This is the example4() function of TutorialExamples.java. It begins by calling example2() to create a connection object and populate the javarepository with four triples describing Bob and Alice.

public static void example4() throws RepositoryException {

RepositoryConnection conn = example2(false);

closeBeforeExit(conn);

We're going to search for triples that mention Alice, so we have to create an "Alice" URI to use in the search pattern. This requires us to build the bridge from the connection back to the valueFactory:

Repository myRepository = conn.getRepository();

URI alice = myRepository.getValueFactory().createURI("http://example.org/people/alice");

Now we search for triples with Alice's URI in the subject position. The "null" values are wildcards for the predicate and object positions of the triple.

RepositoryResult<Statement> statements = conn.getStatements(alice, null, null, false);

The getStatements() method returns a repositoryResult object (bound to the variable "statements" in this case). This object can be iterated over, exposing one result statement at a time. It is sometimes desirable to screen the results for duplicates, using the enableDuplicateFilter() method. Note, however, that duplicate filtering can be expensive. Our example does not contain any duplicates, but it is possible for them to occur.

try {

statements.enableDuplicateFilter();

while (statements.hasNext()) {

println(statements.next());

}

This prints out the two matching triples for "Alice."

(http://example.org/people/alice, http://www.w3.org/1999/02/22-rdf-syntax-ns#type, http://example.org/ontology/Person) [null]

(http://example.org/people/alice, http://example.org/ontology/name, "Alice") [null]

At this point it is good form to close the repositoryResponse object because it occupies memory and is rarely reused in most programs. We can also close the connection and shut down the repository.

} finally {

statements.close();

}

conn.close();

myRepository.shutDown();

}

Literal Values (example5()) Return to Top

The next example, example5(), illustrates some variations on what we have seen so far. The example creates and asserts typed and plain literal values, including language-specific plain literals, and then conducts searches for them in three ways:

- getStatements() search, which is an efficient way to match a single triple pattern.

- SPARQL direct match, for efficient multi-pattern search.

- SPARQL filter match, for sophisticated filtering such as performing range matches.

The getStatements() and SPARQL direct searches return exactly the datatype you ask for. The SPARQL filter queries can sometimes return multiple datatypes. This behavior will be one focus of this section.

If you are not explicit about the datatype of a value, either when asserting the triple or when writing a search pattern, AllegroGraph will deduce an appropriate datatype and use it. This is another focus of this section. This helpful behavior can sometimes surprise you with unanticipated results.

Setup

Example5() begins by obtaining a connection object from example1(), and then clears the repository of all existing triples.

public static void example5() throws Exception {

RepositoryConnection conn = example2(false);

Repository myRepository = conn.getRepository();

ValueFactory f = myRepository.getValueFactory();

println("\nStarting example5().");

conn.clear();

For sake of coding efficiency, it is good practice to create variables

for namespace strings. We'll use this namespace again and again in the

following lines. We have made the URIs in this example very short to

keep the result displays compact.

String exns = "http://people/";

The example creates new resources describing seven people, named

alphabetically from Alice to Greg. These are URIs to use in the

subject field of the triples. The example shows how to enter a full

URI string (Alice through Dave), or alternately how to combine a

namespace with a local resource name (Eric through Greg).

URI alice = f.createURI("http://people/alice");

URI bob = f.createURI("http://people/bob");

URI carol = f.createURI("http://people/carol");

URI dave = f.createURI("http://people/dave");

URI eric = f.createURI(exns, "eric");

URI fred = f.createURI(exns, "fred");

URI greg = f.createURI(exns "greg");

Numeric Literal Values

This section explores the behavior of numeric literals.

Asserting Numeric Data

The first section assigns ages to the participants, using a variety of numeric types. First we need a URI for the "age" predicate.

URI age = f.createURI(exns, "age");

The next step is to create a variety of values representing ages. Coincidentally, these people are all 42 years old, but we're going to record that information in multiple ways:

Literal fortyTwo = f.createLiteral(42); // creates int

Literal fortyTwoDecimal = f.createLiteral(42.0); // creates float

Literal fortyTwoInt = f.createLiteral("42", XMLSchema.INT);

Literal fortyTwoLong = f.createLiteral("42", XMLSchema.LONG);

Literal fortyTwoFloat = f.createLiteral("42", XMLSchema.FLOAT);

Literal fortyTwoString = f.createLiteral("42", XMLSchema.STRING);

Literal fortyTwoPlain = f.createLiteral("42"); // creates plain literal

In four of these statements, we explicitly identified the datatype of the value in order to create an INT, a LONG, a DOUBLE and a STRING. This is the best practice.

In three other statements, we just handed AllegroGraph numeric-looking values to see what it would do with them. As we will see in a moment, 42 creates an INT, 42.0 becomes into a DOUBLE, and "42" becomes a "plain" (untyped) literal value. (Note that plain literals are not quite the same thing as typed literal strings. A search for a plain literal will not always match a typed string, and vice versa.)

Now we need to assemble the URIs and values into statements (which are client-side triples):

Statement stmt1 = f.createStatement(alice, age, fortyTwo);

Statement stmt2 = f.createStatement(bob, age, fortyTwoDecimal);

Statement stmt3 = f.createStatement(carol, age, fortyTwoInt);

Statement stmt4 = f.createStatement(dave, age, fortyTwoLong);

Statement stmt5 = f.createStatement(eric, age, fortyTwoFloat);

Statement stmt6 = f.createStatement(fred, age, fortyTwoString);

Statement stmt7 = f.createStatement(greg, age, fortyTwoPlain);

And then add the statements to the triple store on the AllegroGraph server. We can use either add() or addStatement() for this purpose.

conn.add(stmt1);

conn.add(stmt2);

conn.add(stmt3);

conn.add(stmt4);

conn.add(stmt5);

conn.add(stmt6);

conn.add(stmt7);

Now we'll complete the round trip to see what triples we get back from these assertions. This is how we use getStatements() in this example to retrieve and display age triples for us:

println("\nShowing all age triples using getStatements(). Seven matches.");

RepositoryResult<Statement> statements = conn.getStatements(null, age, null, false);

try {

while (statements.hasNext()) {

println(statements.next());

}

} finally {

statements.close();

}

This loop prints all age triples to the interaction window. Note that the retrieved triples are of six types: two ints, a long, a float, a double, a long, a string, and a "plain literal." All of them say that their person's age is 42. Note that the triple for Greg has the plain literal value "42", while the triple for Fred uses "42" as a string.

Showing all age triples using getStatements(). Seven matches.

(http://people/greg, http://people/age, "42") [null]

(http://people/fred, http://people/age, "42"^^<http://www.w3.org/2001/XMLSchema#string>) [null]

(http://people/eric, http://people/age, "4.2E1"^^<http://www.w3.org/2001/XMLSchema#float>) [null]

(http://people/dave, http://people/age, "42"^^<http://www.w3.org/2001/XMLSchema#long>) [null]

(http://people/carol, http://people/age, "42"^^<http://www.w3.org/2001/XMLSchema#int>) [null]

(http://people/bob, http://people/age, "4.2E1"^^<http://www.w3.org/2001/XMLSchema#double>) [null]

(http://people/alice, http://people/age, "42"^^<http://www.w3.org/2001/XMLSchema#int>) [null]

If you ask AllegroGraph for a specific datatype, you will get it. If you leave the decision up to AllegroGraph, you might get something unexpected such as an plain literal value.

Matching Numeric Data

This section explores getStatements() and SPARQL matches against numeric triples.

Match 42. In the first example, we asked AllegroGraph to find an untyped number, 42.

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, age, 42, false) |

Illegal argument. |

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p 42 .} |

No matches. |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = 42)} |

"42"^^<http://www.w3.org/2001/XMLSchema#int>

"4.2E1"^^<http://www.w3.org/2001/XMLSchema#float>

"42"^^<http://www.w3.org/2001/XMLSchema#long>

"4.2E1"^^<http://www.w3.org/2001/XMLSchema#double> |

The getStatements() query cannot accept a text input parameter, so that experiment won't run. The SPARQL direct match didn't know how to interpret the untyped value and found zero matches. The SPARQL filter match, however, opened the doors to matches of multiple numeric types, and returned ints, floats, longs and doubles.

"Match 42.0" without explicitly declaring the number's type.

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, age, 42.0, false) |

Illegal argument. |

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p 42.0 .} |

No direct matches. |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = 42.0)} |

"42"^^<http://www.w3.org/2001/XMLSchema#int>

"4.2E1"^^<http://www.w3.org/2001/XMLSchema#float>

"42"^^<http://www.w3.org/2001/XMLSchema#long>

"4.2E1"^^<http://www.w3.org/2001/XMLSchema#double> |

The getStatements() method cannot accept this input. The filter match returned all numeric types that were equal to 42.0.

"Match '42'^^<http://www.w3.org/2001/XMLSchema#int>." Note that we have to use a variable (fortyTwoInt) bound to a Literal value in order to offer this int to getStatements(). We can't just type the value into the getStatements() method directly.

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, age, fortyTwoInt, false) |

"42"^^<http://www.w3.org/2001/XMLSchema#int>

|

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p "42"^^<http://www.w3.org/2001/XMLSchema#int>} |

"42"^^<http://www.w3.org/2001/XMLSchema#int> |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = "42"^^<http://www.w3.org/2001/XMLSchema#int>)} |

"42"^^<http://www.w3.org/2001/XMLSchema#int>

"4.2E1"^^<http://www.w3.org/2001/XMLSchema#float>

"42"^^<http://www.w3.org/2001/XMLSchema#long>

"4.2E1"^^<http://www.w3.org/2001/XMLSchema#double> |

Both the getStatements() query and the SPARQL direct query returned exactly what we asked for: ints. The filter match returned all numeric types that matches in value.

"Match '42'^^<http://www.w3.org/2001/XMLSchema#long>." Again we need a bound variable to offer a Literal value to getStatements().

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, age, fortyTwoLong, false) |

"42"^^<http://www.w3.org/2001/XMLSchema#long>

|

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p "42"^^<http://www.w3.org/2001/XMLSchema#long>} |

"42"^^<http://www.w3.org/2001/XMLSchema#long> |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = "42"^^<http://www.w3.org/2001/XMLSchema#long>)} |

"42"^^<http://www.w3.org/2001/XMLSchema#int>

"4.2E1"^^<http://www.w3.org/2001/XMLSchema#float>

"42"^^<http://www.w3.org/2001/XMLSchema#long>

"4.2E1"^^<http://www.w3.org/2001/XMLSchema#double> |

Both the getStatements() query and the SPARQL direct query returned longs. The filter match returned all numeric types.

"Match '42'^^<http://www.w3.org/2001/XMLSchema#double>."

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, age, fortyTwoDouble, false) |

"42"^^<http://www.w3.org/2001/XMLSchema#double>

|

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p "42"^^<http://www.w3.org/2001/XMLSchema#double>} |

"42"^^<http://www.w3.org/2001/XMLSchema#double> |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = "42"^^<http://www.w3.org/2001/XMLSchema#double>)} |

"42"^^<http://www.w3.org/2001/XMLSchema#int>

"4.2E1"^^<http://www.w3.org/2001/XMLSchema#float>

"42"^^<http://www.w3.org/2001/XMLSchema#long>

"4.2E1"^^<http://www.w3.org/2001/XMLSchema#double> |

Both the getStatements() query and the SPARQL direct query returned doubles. The filter match returned all numeric types.

Matching Numeric Strings and Plain Literals

At this point we are transitioning from tests of numeric matches to tests of string matches, but there is a gray zone to be explored first. What do we find if we search for strings that contain numbers? In particular, what about "plain literal" values that are almost, but not quite, strings?

"Match '42'^^<http://www.w3.org/2001/XMLSchema#string>." This example asks for a typed string to see if we get any numeric matches back.

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, age, fortyTwoString, false) |

"42"^^<http://www.w3.org/2001/XMLSchema#string>

It did not match the plain literal.

|

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p "42"^^<http://www.w3.org/2001/XMLSchema#string>} |

"42"^^<http://www.w3.org/2001/XMLSchema#string>

"42" This is the plain literal value. |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = "42"^^<http://www.w3.org/2001/XMLSchema#string>)} |

"42"^^<http://www.w3.org/2001/XMLSchema#string>

"42" This is the plain literal value. |

The getStatements() query matched a literal string only. The SPARQL queries returned matches that were both typed strings and plain literals. There were no numeric matches.

"Match plain literal '42'." This example asks for a plain literal to see if we get any numeric matches back.

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, age, fortyTwoPlain, false) |

"42" This is the plain literal. It did not match the string.

|

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p "42"} |

"42"^^<http://www.w3.org/2001/XMLSchema#string>

"42" This is the plain literal value. |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = "42")} |

"42"^^<http://www.w3.org/2001/XMLSchema#string>

"42" This is the plain literal value. |

The getStatements() query matched the plain literal only, and did not match the string. The SPARQL queries returned matches that were both typed strings and plain literals. There were no numeric matches.

The interesting lesson here is that AllegroGraph distinguishes between strings and plain literals when you use getStatements(), but it lumps them together when you use SPARQL.

Matching Strings

In this section we'll set up a variety of string triples and experiment with matching them using getStatements() and SPARQL. Note that Free Text Search is a different topic. In this section we're doing simple matches of whole strings.

Asserting String Values

We're going to add a "favorite color" attribute to five of the person resources we have used so far. First we need a predicate.

URI favoriteColor = f.createURI(exns, "favoriteColor");

Now we'll create a variety of string values, and a single "plain literal" value.

Literal UCred = f.createLiteral("Red");

Literal LCred = f.createLiteral("red");

Literal RedPlain = f.createLiteral("Red");

Literal rouge = f.createLiteral("rouge", XMLSchema.STRING);

Literal Rouge = f.createLiteral("Rouge", XMLSchema.STRING);

Literal RougePlain = f.createLiteral("Rouge");

Literal FrRouge = f.createLiteral("Rouge", "fr");

Note that in the last line we created a plain literal and assigned it a French language tag. You cannot assign a language tag to strings, only to plain literals. See typed and plain literal values for the specification.

Next we'll add these values to new triples in the triple store.

conn.add(alice, favoriteColor, UCred);

conn.add(bob, favoriteColor, LCred);

conn.add(carol, favoriteColor, RedPlain);

conn.add(dave, favoriteColor, rouge);

conn.add(eric, favoriteColor, Rouge);

conn.add(fred, favoriteColor, RougePlain);

conn.add(greg, favoriteColor, FrRouge);

If we run a getStatements() query for all favoriteColor triples, we get these values returned:

Showing all color triples using getStatements(). Should be seven.

(http://people/greg, http://people/favoriteColor, "Rouge"@fr) [null]

(http://people/fred, http://people/favoriteColor, "Rouge") [null]

(http://people/eric, http://people/favoriteColor, "Rouge"^^<http://www.w3.org/2001/XMLSchema#string>) [null]

(http://people/dave, http://people/favoriteColor, "rouge"^^<http://www.w3.org/2001/XMLSchema#string>) [null]

(http://people/carol, http://people/favoriteColor, "Red") [null]

(http://people/bob, http://people/favoriteColor, "red"^^<http://www.w3.org/2001/XMLSchema#string>) [null]

(http://people/alice, http://people/favoriteColor, "Red"^^<http://www.w3.org/2001/XMLSchema#string>) [null]

That's four typed strings, capitalized and lower case, plus three plain literals, one with a language tag.

Matching String Data

First let's search for "Red" without specifying a datatype.

"Match 'Red'." What happens if we search for "Red" without specifying a string datatype?

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, age, "Red", false) |

Illegal value.

|

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p "Red"} |

"Red"^^<http://www.w3.org/2001/XMLSchema#string>

"Red" This is the plain literal value. |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = "Red")} |

"Red"^^<http://www.w3.org/2001/XMLSchema#string>

"Red" This is the plain literal value. |

The getStatements() query cannot accept the "Red" argument and cannot run. The SPARQL queries matched both "Red" typed strings and "Red" plain literals, but they did not return the lower case "red" triple. The match was liberal regarding datatype but strict about case.

Let's try "Rouge".

"Match 'Rouge'." What happens if we search for "Rouge" without specifying a string datatype or language? Will it match the triple with the French tag?

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, age, "Rouge", false) |

Illegal.

|

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p "Rouge"} |

"Rouge"^^<http://www.w3.org/2001/XMLSchema#string>

"Rouge" This is the plain literal value.

Did not match the "Rouge"@fr triple. |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = "Rouge")} |

"Rouge"^^<http://www.w3.org/2001/XMLSchema#string>

"Rouge" This is the plain literal value.

Did not match the"Rouge"@fr triple. |

The getStatements() query could not proceed because of the illegal argument. The SPARQL queries matched both "Rouge" typed strings and "Rouge" plain literals, but they did not return the "Rouge"@fr triple. The match was liberal regarding datatype but strict about language. We didn't ask for French, so we didn't get French.

"Match 'Rouge'@fr." What happens if we search for "Rouge"@fr? We'll have to bind the value to a variable (FrRouge) to use getStatements(). We can type the value directly into the SPARQL queries.

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, age, FrRouge, false) |

"Rouge"@fr

|

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p "Rouge"@fr} |

"Rouge"@fr |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = "Rouge"@fr)} |

"Rouge"@fr |

If you ask for a specific language, that's exactly what you are going

to get, in all three types of queries.

You may be wondering how to perform a string match where language and

capitalization don't matter. You can do that with a SPARQL filter

query using the str() function, which returns the

string portion of a literal, without the datatype or language tag. So

applied to the following, str() returns

"Rouge":

"Rouge"^^<http://www.w3.org/2001/XMLSchema#string>

"Rouge"

"Rouge"@fr

Then the lowercase() function eliminates case

issues:

PREFIX fn: <http://www.w3.org/2005/xpath-functions#>

SELECT ?s ?p ?o

WHERE {?s ?p ?o . filter (fn:lower-case(str(?o)) = "rouge")}

This query returns a variety of "Rouge" triples:

http://people/dave http://people/favoriteColor "rouge"^^<http://www.w3.org/2001/XMLSchema#string>

http://people/eric http://people/favoriteColor "Rouge"^^<http://www.w3.org/2001/XMLSchema#string>

http://people/fred http://people/favoriteColor "Rouge"

http://people/greg http://people/favoriteColor "Rouge"@fr

This query matched all triples containing the string "rouge" regardless of datatype or language tag. Remember that the SPARQL "filter" queries are powerful, but they are also the slowest queries. SPARQL direct queries and getStatements() queries are faster.

Matching Booleans

In this section we'll assert and then search for Boolean values.

Asserting Boolean Values

We'll be adding a new attribute to the person resources in our example. Are they, or are they not, seniors?

URI senior = f.createURI(exns, "senior");

The correct way to create Boolean values for use in triples is to create literal values of type Boolean:

Literal trueValue = f.createLiteral("true", XMLSchema.BOOLEAN);

Literal falseValue = f.createLiteral("false", XMLSchema.BOOLEAN);

Note that "true" and "false" must be lower case.

We'll only need two triples:

conn.add(alice, senior, trueValue);

conn.add(bob, senior, falseValue);

When we retrieve the triples (using getStatements()) we see:

(http://people/bob, http://people/senior, "false"^^<http://www.w3.org/2001/XMLSchema#boolean>) [null]

(http://people/alice, http://people/senior, "true"^^<http://www.w3.org/2001/XMLSchema#boolean>) [null]

These are RDF-legal Boolean values that work with the AllegroGraph query engine.

"Match 'true'." There are three correct ways to perform a Boolean search. One is to use the varible trueValue (defined above) to pass a Boolean literal value to getStatements(). SPARQL queries will recognize true and false, and of course the fully-typed "true"^^<http://www.w3.org/2001/XMLSchema#boolean> format is also respected by SPARQL:

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, senior, trueValue, false) |

"true"^^<http://www.w3.org/2001/XMLSchema#boolean>

|

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p true} |

"true"^^<http://www.w3.org/2001/XMLSchema#boolean> |

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p "true"^^<http://www.w3.org/2001/XMLSchema#boolean> |

"true"^^<http://www.w3.org/2001/XMLSchema#boolean> |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = true)} |

"true"^^<http://www.w3.org/2001/XMLSchema#boolean> |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = "true"^^<http://www.w3.org/2001/XMLSchema#boolean>} |

"true"^^<http://www.w3.org/2001/XMLSchema#boolean> |

All of these queries correctly match Boolean values.

In the following example, we use getStatements() to match a DATE object. We have used a DATE literal in the object position of the triple pattern:

println("Retrieve triples matching DATE object.");

RepositoryResult<Statement> statements = conn.getStatements(null, null, date, false);

try {

while (statements.hasNext()) {

println(statements.next());

}

} finally {

statements.close();

}

Retrieve triples matching DATE object.

(http://example.org/people/alice, http://example.org/people/birthdate, "1984-12-06"^^<http://www.w3.org/2001/XMLSchema#date>) [null]

Note the string representation of the DATE object in the following query.

RepositoryResult<Statement> statements = conn.getStatements(null, null,

f.createLiteral("\"1984-12-06\"^^<http://www.w3.org/2001/XMLSchema#date>"), false);

Match triples having specific DATE value.

(<http://example.org/people/alice>, <http://example.org/people/birthdate>, "1984-12-06"^^<http://www.w3.org/2001/XMLSchema#date>)

Let's try the same experiment with DATETIME:

RepositoryResult<Statement> statements = conn.getStatements(null, null, time, false);

Retrieve triples matching DATETIME object.

(http://example.org/people/ted, http://example.org/people/birthdate, "1984-12-06T09:00:00"^^<http://www.w3.org/2001/XMLSchema#dateTime>) [null]

And a DATETIME match without using a literal value object:

RepositoryResult<Statement> statements = conn.getStatements(null, null,

f.createLiteral("\"1984-12-06T09:00:00\"^^<http://www.w3.org/2001/XMLSchema#dateTime>"), false);

Match triples having a specific DATETIME value.

(http://example.org/people/ted, http://example.org/people/birthdate, "1984-12-06T09:00:00"^^<http://www.w3.org/2001/XMLSchema#dateTime>) [null]

Dates, Times and Datetimes

In this final section of example5(), we'll assert and retrieve dates, times and datetimes.

In this context, you might be surprised by the way that AllegroGraph handles time zone data. If you assert (or search for) a timestamp that includes a time-zone offset, AllegroGraph will "normalize" the expression to Greenwich (zulu) time before proceeding. This normalization greatly speeds up searching and happens transparently to you, but you'll notice that the matched values are all zulu times.

Asserting Date, Time and Datetime Values

We're going to add birthdates to our personnel records. We'll need a birthdate predicate:

URI birthdate = f.createURI(exns, "birthdate");

We'll also need four types of literal values: a date, a time, a datetime, and a datetime with a time-zone offset.

Literal date = f.createLiteral("1984-12-06", XMLSchema.DATE);

Literal datetime = f.createLiteral("1984-12-06T09:00:00", XMLSchema.DATETIME);

Literal time = f.createLiteral("09:00:00", XMLSchema.TIME);

Literal datetimeOffset = f.createLiteral("1984-12-06T09:00:00+01:00", XMLSchema.DATETIME);

It is interesting to notice that these literal values print out exactly as we defined them.

Printing out Literals for date, datetime, time, and datetime with Zulu offset.

"1984-12-06"^^<http://www.w3.org/2001/XMLSchema#date>

"1984-12-06T09:00:00"^^<http://www.w3.org/2001/XMLSchema#dateTime>

"09:00:00"^^<http://www.w3.org/2001/XMLSchema#time>

"1984-12-06T09:00:00+01:00"^^<http://www.w3.org/2001/XMLSchema#dateTime>

Now we'll add them to the triple store:

conn.add(alice, birthdate, date);

conn.add(bob, birthdate, datetime);

conn.add(carol, birthdate, time);

conn.add(dave, birthdate, datetimeOffset);

And then retrieve them using getStatements():

getStatements() all birthdates. Four matches.

(http://people/dave, http://people/birthdate, "1984-12-06T08:00:00Z"^^<http://www.w3.org/2001/XMLSchema#dateTime>) [null]

(http://people/carol, http://people/birthdate, "09:00:00Z"^^<http://www.w3.org/2001/XMLSchema#time>) [null]

(http://people/bob, http://people/birthdate, "1984-12-06T09:00:00Z"^^<http://www.w3.org/2001/XMLSchema#dateTime>) [null]

(http://people/alice, http://people/birthdate, "1984-12-06"^^<http://www.w3.org/2001/XMLSchema#date>) [null]

If you look sharply, you'll notice that the zulu offset has been normalized:

Was:"1984-12-06T09:00:00+01:00"

Now:"1984-12-06T08:00:00Z"

Note that the one-hour zulu offset has been applied to the timestamp. "9:00" turned into "8:00."

Matching Date, Time, and Datetime Literals

"Match date." What happens if we search for the date literal we defined? We'll use the "date" variable with getStatements(), but just type the expected value into the SPARQL queries.

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, birthdate, date, false) |

"1984-12-06"

^^<http://www.w3.org/2001/XMLSchema#date>

|

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p '1984-12-06'^^<http://www.w3.org/2001/XMLSchema#date> |

"1984-12-06"

^^<http://www.w3.org/2001/XMLSchema#date> |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o =

'1984-12-06'

^^<http://www.w3.org/2001/XMLSchema#date>)} |

"1984-12-06"

^^<http://www.w3.org/2001/XMLSchema#date> |

All three queries match narrowly, meaning the exact date and datatype we asked for is returned.

"Match datetime." What happens if we search for the datetime literal? We'll use the "datetime" variable with getStatements(), but just type the expected value into the SPARQL queries.

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, birthdate, datetime, false) |

"1984-12-06T09:00:00Z"

^^<http://www.w3.org/2001/XMLSchema#dateTime>

|

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p '1984-12-06T09:00:00Z'

^^<http://www.w3.org/2001/XMLSchema#dateTime> .} |

"1984-12-06T09:00:00Z"

^^<http://www.w3.org/2001/XMLSchema#dateTime> |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = '1984-12-06T09:00:00Z'^^<http://www.w3.org/2001/XMLSchema#dateTime> |

"1984-12-06T09:00:00Z"

^^<http://www.w3.org/2001/XMLSchema#dateTime> |

The matches are specific for the exact date, time and type.

"Match time." What happens if we search for the time literal? We'll use the "time" variable with getStatements(), but just type the expected value into the SPARQL queries.

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, birthdate, time, false) |

"09:00:00Z"

^^<http://www.w3.org/2001/XMLSchema#time>

|

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p "09:00:00Z"

^^<http://www.w3.org/2001/XMLSchema#time> .} |

"09:00:00Z"

^^<http://www.w3.org/2001/XMLSchema#time> |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = "09:00:00Z"^^<http://www.w3.org/2001/XMLSchema#time>)} |

"09:00:00Z"

^^<http://www.w3.org/2001/XMLSchema#time> |

The matches are specific for the exact time and type.

"Match datetime with offset." What happens if we search for a datetime with zulu offset?

| Query Type |

Query |

Matches which types? |

| getStatements() |

conn.getStatements(null, birthdate, datetimeOffset, false) |

"1984-12-06T08:00:00Z"

^^<http://www.w3.org/2001/XMLSchema#dateTime>

|

| SPARQL direct match |

SELECT ?s ?p WHERE {?s ?p "1984-12-06T09:00:00+01:00"

^^<http://www.w3.org/2001/XMLSchema#dateTime> .} |

"1984-12-06T08:00:00Z"

^^<http://www.w3.org/2001/XMLSchema#dateTime> |

| SPARQL filter match |

SELECT ?s ?p ?o WHERE {?s ?p ?o . filter (?o = "1984-12-06T09:00:00+01:00"^^<http://www.w3.org/2001/XMLSchema#dateTime>)} |

"1984-12-06T08:00:00Z"

^^<http://www.w3.org/2001/XMLSchema#dateTime> |

Note that we searched for "1984-12-06T09:00:00+01:00" but found "1984-12-06T08:00:00Z". It is the same moment in time.

Importing Triples (example6() and example7()) Return to Top

The Java Sesame API client can load triples in either RDF/XML format

or NTriples format. The example below calls the connection

object's add() method to load an NTriples file,

and addFile() to load an RDF/XML file. Both methods

work, but the best practice is to use addFile().

| Note: If you get a "file not found" error while running this example, it means that Java is looking in the wrong directory for the data files to load. The usual explanation is that you have moved the TutorialExamples.java file to an unexpected directory. You can clear the issue by putting the data files in the same directory as TutorialExamples.java. |

The RDF/XML file contains a short list of v-cards (virtual business cards), like this one:

<rdf:Description rdf:about="http://somewhere/JohnSmith/">

<vCard:FN>John Smith</vCard:FN>

<vCard:N rdf:parseType="Resource">

<vCard:Family>Smith</vCard:Family>

<vCard:Given>John</vCard:Given>

</vCard:N>

</rdf:Description>

The NTriples file contains a graph of resources describing the Kennedy family, the places where they were each born, their colleges, and their professions. A typical entry from that file looks like this:

<http://www.franz.com/simple#person1> <http://www.franz.com/simple#first-name> "Joseph" .

<http://www.franz.com/simple#person1> <http://www.franz.com/simple#middle-initial> "Patrick" .

<http://www.franz.com/simple#person1> <http://www.franz.com/simple#last-name> "Kennedy" .

<http://www.franz.com/simple#person1> <http://www.franz.com/simple#suffix> "none" .

<http://www.franz.com/simple#person1> <http://www.franz.com/simple#alma-mater> <http://www.franz.com/simple#Harvard> .

<http://www.franz.com/simple#person1> <http://www.franz.com/simple#birth-year> "1888" .

<http://www.franz.com/simple#person1> <http://www.franz.com/simple#death-year> "1969" .

<http://www.franz.com/simple#person1> <http://www.franz.com/simple#sex> <http://www.franz.com/simple#male> .

<http://www.franz.com/simple#person1> <http://www.franz.com/simple#spouse> <http://www.franz.com/simple#person2> .

<http://www.franz.com/simple#person1> <http://www.franz.com/simple#has-child> <http://www.franz.com/simple#person3> .

<http://www.franz.com/simple#person1> <http://www.franz.com/simple#profession> <http://www.franz.com/simple#banker> .

<http://www.franz.com/simple#person1> <http://www.franz.com/simple#birth-place> <http://www.franz.com/simple#place5> .

<http://www.franz.com/simple#person1> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://www.franz.com/simple#person> .

Note that AllegroGraph can segregate triples into contexts (subgraphs) by treating them as quads, but the NTriples and RDF/XML formats can not include context information. They deal with triples only, so there is no place to store a fourth field in those formats. In the case of the add() call, we have omitted the context

argument so the triples are loaded the default background graph (sometimes called the "null context.")

The

addFile() call includes an explicit context setting, so the fourth argument of

each vcard triple will be the context named "/tutorial/vc_db_1_rdf".

The connection size() method takes an optional context argument. With no

argument, it returns the total number of triples in the repository. Below, it returns the number

'16' for the named subgraph, and the number '28' for the null context

(None) argument.

The example6() function of TutorialExamples.java creates a transaction connection to AllegroGraph, using methods you have seen before, plus the repositoryConnection object's setAutoCommit() method:

public static AGRepositoryConnection example6() throws Exception {

AGServer server = new AGServer(SERVER_URL, USERNAME, PASSWORD);

AGCatalog catalog = server.getCatalog(CATALOG_ID);

AGRepository myRepository = catalog.createRepository(REPOSITORY_ID);

myRepository.initialize();

AGRepositoryConnection conn = myRepository.getConnection();

closeBeforeExit(conn);

conn.clear();

conn.setAutoCommit(false); // transaction session

ValueFactory f = myRepository.getValueFactory();

The transaction session is not immediately pertinent to the examples in this section, but will become important in later examples that reuse this connection to demonstrate Prolog Rules and Social Network Analysis.

The variables path1 and path2 are bound to the RDF/XML and NTriples files, respectively. The data files are in the same directory as TutorialExamples.java. If your data files are in another directory, adjust the DATA_DIR constant.

File path1 = new File(DATA_DIR, "java-vcards.rdf");

File path2 = new File(DATA_DIR = "java-kennedy.ntriples");

Both examples need a base URI as one of the required arguments to the asserting methods:

String baseURI = "http://example.org/example/local";

The NTriples about the vcards will be added to a specific context, so naturally we need a URI to identify that context.

URI context = f.createURI("http://example.org#vcards");

In the next step we use add() to load the vcard

triples into the #vcards context:

conn.add(new File(path1), baseURI, RDFFormat.RDFXML, context);

Then we use add() to load the Kennedy family tree

into the null context:

conn.add(new File(path2), baseURI, RDFFormat.NTRIPLES);

Now we'll ask AllegroGraph to report on how many triples it sees in the null context and in the #vcards context:

println("After loading, repository contains " + conn.size(context) +

" vcard triples in context '" + context + "'\n and " +

conn.size((Resource)null) + " kennedy triples in context 'null'.");

The output of this report was:

After loading, repository contains 16 vcard triples in context 'http://example.org#vcards'

and 1214 kennedy triples in context 'null'.

Example7() borrows the same triples we loaded in example6(), above, and runs two unconstrained retrievals. The first uses getStatement, and prints out the subject URI and context of each triple.

public static void example7() throws Exception {

RepositoryConnection conn = example6(false);

println("\nMatch all and print subjects and contexts");

RepositoryResult<Statement> result = conn.getStatements(null, null, null, false);

for (int i = 0; i < 25 && result.hasNext(); i++) {

Statement stmt = result.next();

println(stmt.getSubject() + " " + stmt.getContext());

}

result.close();

This loop prints out a mix of triples from the null context and from the #vcards context. In this case the output contained the 16 v-card triples plus another nine from the Kennedy data. We set a limit of 25 triples on the output because the Kennedy dataset contains over a thousand triples.

The following loop, however, does not produce the same results. This is a SPARQL query that should match all available triples, printing out the subject and context of each triple. We limited this query by using the DISTINCT keyword. Otherwise there would be many duplicate results.

println("\nSame thing with SPARQL query (can't retrieve triples in the null context)");

String queryString = "SELECT DISTINCT ?s ?c WHERE {graph ?c {?s ?p ?o .} }";

TupleQuery tupleQuery = conn.prepareTupleQuery(QueryLanguage.SPARQL, queryString);

TupleQueryResult qresult = tupleQuery.evaluate();

while (qresult.hasNext()) {

BindingSet bindingSet = qresult.next();

println(bindingSet.getBinding("s") + " " + bindingSet.getBinding("c"));

}

qresult.close();

conn.close();

In this case, the loop prints out only v-card triples from the #vcards context. The SPARQL query is not able to access the null context when a named context is also present.

Exporting Triples (example8() and example9()) Return to Top

The next examples show how to write triples out to a file in either NTriples format or RDF/XML format. The output of either format may be optionally redirected to standard output (the Java command window) for inspection.

Example example8() begins by obtaining a connection object from example6(). This means the repository contains v-card triples in the #vcards context, and Kennedy family tree triples in the null context (the default graph).

public static void example8() throws Exception {

RepositoryConnection conn = example6(false);

Repository myRepository = conn.getRepository();

In this example, we'll export the triples in the #vcards context.

URI context = myRepository.getValueFactory().createURI("http://example.org#vcards");

To write triples in

NTriples format, call NTriplesWriter(). You have to a give it an output stream, which could be either a file path or standard output. The code below gives you the choice of writing to a file or to the interaction window.

String outputFile = "/tmp/temp.nt";

// outputFile = null;

if (outputFile == null) {

println("\nWriting n-triples to Standard Out instead of to a file");

} else {

println("\nWriting n-triples to: " + outputFile);

}

OutputStream output = (outputFile != null) ? new FileOutputStream(outputFile) : System.out;

NTriplesWriter ntriplesWriter = new NTriplesWriter(output);

conn.export(ntriplesWriter, context);

To write triples in RDF/XML format, call RDFXMLWriter().

String outputFile2 = "/tmp/temp.rdf";

outputFile2 = null;

if (outputFile2 == null) {

println("\nWriting RDF to Standard Out instead of to a file");

} else {

println("\nWriting RDF to: " + outputFile2);

}

output = (outputFile2 != null) ? new FileOutputStream(outputFile2) : System.out;

RDFXMLWriter rdfxmlfWriter = new RDFXMLWriter(output);

conn.export(rdfxmlfWriter, context);

output.write('\n');

conn.close();

The export() method writes

out all triples in one or more contexts. This provides a convenient means for making

local backups of sections of your RDF store. If two or more contexts are specified,

then triples from all of those contexts will be written to the same file. Since the

triples are "mixed together" in the file, the context information is not recoverable. If the context argument is omitted, all triples in the store are written out, and again all context information is lost.

Finally, if the objective is to write out a filtered set of triples,

the exportStatements() method can be used. The example below (from example9()) writes

out all RDF:TYPE declaration triples to standard output.

conn.exportStatements(null, RDF.TYPE, null, false, new RDFXMLWriter(System.out));

Searching Multiple Graphs (example10()) Return to Top

We have already seen contexts (subgraphs) at work when loading and saving files. In example10() we provide more realistic examples of contexts, and we explore the FROM, FROM DEFAULT, and FROM NAMED clauses of a SPARQL query to see how they interact with multiple subgraphs in the triple store. Finally, we will introduce the dataset object. A dataset is a list of contexts that should all be searched simultaneously. It is an object for use with SPARQL queries.

To set up the example, we create six statements, and add two of each to three different contexts: context1, context2, and the null context. The process of setting up the six statements follows the same pattern as we used in the previous examples:

String exns = "http://example.org/people/";

// Create URIs for resources, predicates and classes.

URI alice = f.createURI(exns, "alice");

URI bob = f.createURI(exns, "bob");

URI ted = f.createURI(exns, "ted");

URI person = f.createURI("http://example.org/ontology/Person");

URI name = f.createURI("http://example.org/ontology/name");

// Create literal name values.

Literal alicesName = f.createLiteral("Alice");

Literal bobsName = f.createLiteral("Bob");

Literal tedsName = f.createLiteral("Ted");

// Create URIs to identify the named contexts.

URI context1 = f.createURI(exns, "context1");

URI context2 = f.createURI(exns, "context2");

The next step is to assert two triples into each of three contexts.

// Assemble new statements and add them to the contexts.

conn.add(alice, RDF.TYPE, person, context1);

conn.add(alice, name, alicesName, context1);

conn.add(bob, RDF.TYPE, person, context2);

conn.add(bob, name, bobsName, context2);

conn.add(ted, RDF.TYPE, person);

conn.add(ted, name, tedsName);

Note that the final two statements (about Ted) were added to the null context (the unnamed default graph).

GetStatements

The first test uses getStatements() to return all triples in all contexts (context1, context2, and null).

This is default search behavior, so there is no need to specify the contexts in either the conn.getStatements() method. Note that conn.size() also reports on all contexts by default.

RepositoryResult<Statement> statements = conn.getStatements(null, null, null, false);

println("\nAll triples in all contexts: " + (conn.size()));

while (statements.hasNext()) {

println(statements.next());

}

The output of this loop is shown below. The context URIs are in the fourth position. Triples from the default graph have [null] in the fourth position.

All triples in all contexts: 6

(http://example.org/people/alice, http://www.w3.org/1999/02/22-rdf-syntax-ns#type, http://example.org/ontology/Person) [http://example.org/people/context1]

(http://example.org/people/alice, http://example.org/ontology/name, "Alice") [http://example.org/people/context1]

(http://example.org/people/bob, http://www.w3.org/1999/02/22-rdf-syntax-ns#type, http://example.org/ontology/Person) [http://example.org/people/context2]

(http://example.org/people/bob, http://example.org/ontology/name, "Bob") [http://example.org/people/context2]

(http://example.org/people/ted, http://www.w3.org/1999/02/22-rdf-syntax-ns#type, http://example.org/ontology/Person) [null]

(http://example.org/people/ted, http://example.org/ontology/name, "Ted") [null]

The next match explicitly lists 'context1' and 'context2' as the only contexts to participate in the match. It returns four statements. The conn.size() method can also address individual contexts.

statements = conn.getStatements(null, null, null, false, context1, context2);

println("\nTriples in contexts 1 or 2: " + (conn.size(context1) + conn.size(context2)));

while (statements.hasNext()) {

println(statements.next());

}

The output of this loop shows that the triples in the null context have been excluded.

Triples in contexts 1 or 2: 4

(http://example.org/people/bob, http://example.org/ontology/name, "Bob") [http://example.org/people/context2]

(http://example.org/people/bob, http://www.w3.org/1999/02/22-rdf-syntax-ns#type, http://example.org/ontology/Person) [http://example.org/people/context2]

(http://example.org/people/alice, http://example.org/ontology/name, "Alice") [http://example.org/people/context1]

(http://example.org/people/alice, http://www.w3.org/1999/02/22-rdf-syntax-ns#type, http://example.org/ontology/Person) [http://example.org/people/context1]

This time we use getStatements() to search explicitly for triples in the null context or in context 2. Note that you can use conn.size() to report on the null context alone, if you define null to be a resource as shown here.

statements = conn.getStatements(null, null, null, false, null, context2);

println("\nTriples in contexts null or 2: " + (conn.size((Resource)null) + conn.size(context2)));

while (statements.hasNext()) {

println(statements.next());

}

The output of this loop is:

Triples in contexts null or 2: 4

(http://example.org/people/bob, http://example.org/ontology/name, "Bob") [http://example.org/people/context2]

(http://example.org/people/bob, http://www.w3.org/1999/02/22-rdf-syntax-ns#type, http://example.org/ontology/Person) [http://example.org/people/context2]

(http://example.org/people/ted, http://example.org/ontology/name, "Ted") [null]

(http://example.org/people/ted, http://www.w3.org/1999/02/22-rdf-syntax-ns#type, http://example.org/ontology/Person) [null]

The lesson is that getStatements() can freely mix triples from the null context and named contexts. It is all you need as long as the query is a very simple one.

SPARQL using FROM and FROM NAMED

In many of our examples we have used a simple SPARQL query to retrieve triples from AllegroGraph's default graph. This has been very convenient but it is also misleading. As soon as we tell SPARQL to search a specific graph, we lose the ability to search AllegroGraph's default graph! Triples from the null graph vanish from the search results. Why is that?

- It is important to understand that AllegroGraph and SPARQL use the phrase "default graph" to identify two very different things. AllegroGraph's default graph, or null context, is simply the set of all triples that have "null" in the fourth field of the "triple." The "default graph" is an unnamed subgraph of the AllegroGraph triple store.

- SPARQL uses "default graph" to describe something that is very different. In SPARQL, the "default graph" is a temporary pool of triples imported from one or more "named" graphs. SPARQL's "default graph" is constructed and discarded in the service of a single query.

Standard SPARQL was designed for named graphs only, and has no syntax to indentify a truly unnamed graph. AllegroGraph's SPARQL, however, has been extended to allow the unnamed graph to participate in multi-graph queries.

We can use AllegroGraph's SPARQL to search specific subgraphs in three ways. We can create a temporary "default graph" using the FROM operator; we can put AllegroGraph's unnamed graph into SPARQL's default graph using FROM DEFAULT; or we can target specific named graphs using the FROM NAMED operator.

We can use SPARQL to search specific subgraphs in two ways. We can create a temporary "default graph" using the FROM operator, or we can target specific named graphs using the FROM NAMED operator.

- FROM takes one (or more) subgraphs identified by their URIs and temporarily turns them into a single default graph. We match triples in this graph using simple (non-GRAPH) patterns.

- FROM DEFAULT takes AllegroGraph's null graph and temporarily makes it part of SPARQL's default graph. Again, we use simple patterns to match this graph.

- FROM NAMED takes one (or more) named graphs, and declares them to be named graphs in the query. Named graphs must be addressed using explicit GRAPH patterns.

We can also combine these operators in a single query, to search the SPARQL default graph and one or more named graphs at the same time.